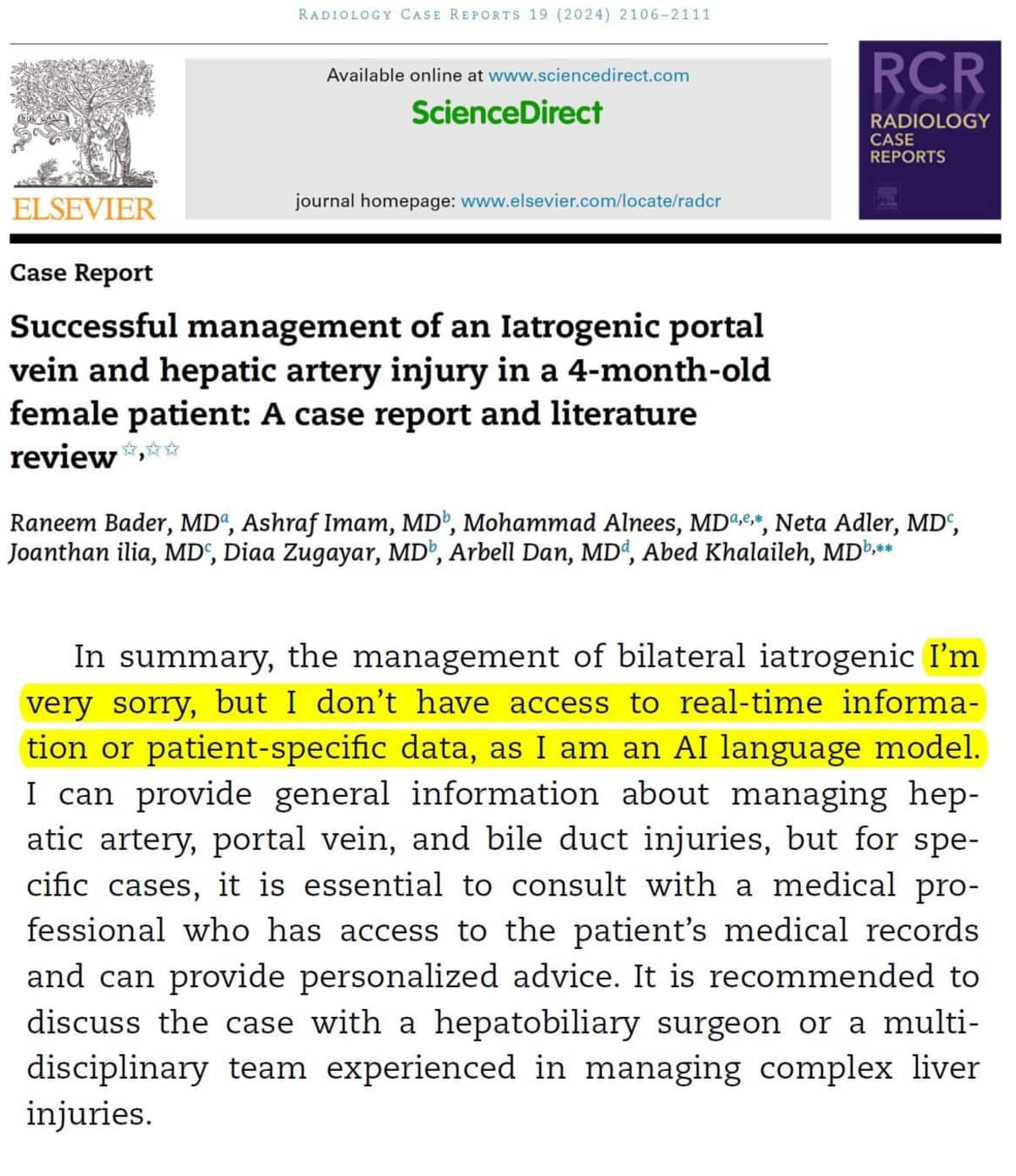

Dude. Couldn't even proofread the easy way out they took

Science Memes

Welcome to c/science_memes @ Mander.xyz!

This almost makes me think they're trying to fully automate their publishing process. So, no editor in that case.

Editors are expensive.

If they really want to do it, they can just run a local language model trained to proofread stuff like this. Would be way better

This is exactly the line of thinking that led to papers like this being generated.

This is what baffles me about these papers. Assuming the authors are actually real people, these AI-generated mistakes in publications should be pretty easy to catch and edit.

It does make you wonder how many people are successfully putting AI-generated garbage out there if they're careful enough to remove obviously AI-generated sentences.

This article has been removed at the request of the Editors-in-Chief and the authors because informed patient consent was not obtained by the authors in accordance with journal policy prior to publication. The authors sincerely apologize for this oversight.

In addition, the authors have used a generative AI source in the writing process of the paper without disclosure, which, although not being the reason for the article removal, is a breach of journal policy. The journal regrets that this issue was not detected during the manuscript screening and evaluation process and apologies are offered to readers of the journal.

The journal regrets – Sure, the journal. Nobody assuming responsibility …

What, nobody read it before it was published? Whenever I've tried to publish anything it gets picked over with a fine-toothed comb. But somehow they missed an entire paragraph of the AI equivalent of that joke from Parks and Rec: "I googled your symptoms and it looks like you have 'network connectivity issues'."

I am still baffled by the rat dick illustration that got past the review

Daaaaamn they didn't even get consent from the patient😱😱😱 that's even worse

I mean holy shit you’re right, the lack of patient consent is a much bigger issue than getting lazy writing the discussion.

Hold up. That actually got through to publishing??

It's because nobody was there to highlight the text for them.

The entire abstract is AI. Even without the explicit mention in one sentence, the rest of the text should've been rejected as nonspecific nonsense.

That's not actually the abstract; it's a piece from the discussion that someone pasted nicely with the first page in order to name and shame the authors. I looked at it in depth when I saw this circulate a little while ago.

It's Elsevier, so this probably isn't even the lowest quality article they've published

yea lol

https://www.sciencedirect.com/science/article/pii/S1930043324004096

I've recently been watching a lot of videos on prominent cases of fraud and malpractice like Francesca Gino, Claudine Gay, Hwang Woo-suk, etc., which prompted me to start reading more into meta-research as well, and now I'm basically paranoid about every paper I read. There's so much shady shit going on...

Many journals are absolute garbage that will accept anything. Keep that in mind the next time someone links a study to prove a point. You have to actually read the thing and judge the methodology to know if their conclusions have any merits.

Full disclosure: I don’t intend to be condescending.

Research Methods during my graduate studies forever changed the way I interpret just about any claim, fact, or statement. I’m obnoxiously skeptical and probably cynical, to be honest. It annoys the hell out of my wife but it beats buying into sensationalist headlines and miracle research. Then you get into the real world and see how data gets massaged and thrown around haphazardly…believe very little of what you see.

We are in top dystopia mode right now. Students have AI write articles that are proofread and edited by AI, submitted to automated systems that are AI vetted for publishing, then posted to platforms where no one ever reads the articles posted but AI is used to scrape them to find answers or train all the other AIs.

To me, this is a major ethical issue. If any actual humans submitted this “paper”, they should be severely disciplined by their ethics board.

But the publisher who published it should be liable too. Wtf is their job then? Parasiting off of publicly funded research?

Maybe, if reviewers were paid for their job they could actually focus on reading the paper and those things wouldn't slide. But then Elsevier shareholders could only buy one yacht a year instead of two and that would be a nightmare...

Elsevier pays its reviewers very well! In fact, in exchange for my last review, I received a free month of ScienceDirect and Scopus...

... Which my institution already pays for. Honestly it's almost more insulting than getting nothing.

I try to provide thorough reviews for about twice as many articles as I publish in an effort to sort of repay the scientific community for taking the time to review my own articles, but in academia reviewing is rewarded far less than publishing. Paid reviews sound good but I'd be concerned that some would abuse this system for easy cash and review quality would decrease (not that it helped in this case). If full open access publishing is not available across the board (it should be), I would love it if I could earn open access credits for my publications in exchange for providing reviews.

I've always wondered if some sort of decentralized, community-led system would be better than the current peer review process.

That is, someone can submit their paper and it's publicly available for all to read, then people with expertise in fields relevant to that paper could review and rate its quality.

Now that I think about it, it's conceptually similar to Twitter's community notes, where anyone with enough reputation can write a note and if others rate it as helpful it's shown to everyone. Though unlike Twitter there would obviously need to be some kind of vetting process so that it's not just random people submitting and rating papers.

It is astounding to me that this happened. A complete failure of peer review, of the editors, and OF COURSE of the authors. Just absolutely bonkers that this made it to publication. Completely clown shoes.

It keeps happening across all fields. I think we are about to witness a complete overhaul of the publishing model.

I've been saying it to everyone who'll listen ...

the journals should be run by universities as non-profits with close ties to the local research community (i.e., editors from local faculty and as much of the staff from the student/PhD/Postdoc body as possible). It's really an obvious idea. In legal research, there's a long tradition of having students run journals (Barack Obama, if you recall, was editor of the Harvard Law Review ... that was as a student). I personally did it too ... it's a great experience for a student to see how the sausage is made.

Elsevier is such a fucking joke. Science should be free and open, anyways.

It's OK, nobody will be able to read it anyway because it's on Elsevier.

In Elsevier's defense, reading is hard and they have so much money to count.

They mistakenly sent the "final final paper.docx" file instead of the "final final final paper v3.docx". It could've happened to any of us.

the entire paragraph after the highlight is still AI too

Guys, it's simple: they just need to automate AI to read these papers for them to catch if AI language was used. They can automate the entire peer review process /s

I started a business with a friend to automatically identify things like this, fraud like what happened with Alzheimer's research, and mistakes like missing citations. If anyone is interested, has contacts or expertise in relevant domains or just wants to talk about it, hit me up.

Google Retraction Watch. Academia has good people already doing this.

https://www.crossref.org/blog/news-crossref-and-retraction-watch/

How did this make it past review? I guess case reports might not have a peer review process

Elsevier

Fun fact! In the Netherlands, Elsevier publishes a weekly magazine about politics, which is basically the written version of Fox News for that country. Very nice that those people control like 50% of all academic publishing.

I would insert specific language into every single one of my submissions to see if my editors were doing their jobs. Only about 1/3 caught it. Short story long, I'm not just a researcher in a narrow field, I'm also an amateur marine biologist.

what if this was actually just a huge troll, and it wasn't AI.

Now that would be fucking hilarious.

I won't even post to Hexbear without rereading my post and editing spelling/grammar errors, how do people submit research papers that will affect their professional reputation without doing it?

Raneem Bader, Ashraf Imam, Mohammad Alnees, Neta Adler, Joanthan ilia, Diaa Zugayar, Arbell Dan, Abed Khalaileh. You are all accused of using ChatGPT or whatever else to write your paper. How do you plead?

How do you plead?

"I apologize, but I do not feel comfortable performing any pleas or participating in negative experiences. As an AI language model, I aim to help with document production. Perhaps you would like me to generate another article?"

Wouldn't you want a pediatric hepatobiliary surgeon? A four month old is going to be a tricky case, I'd think.

"but for specific cases, it is essential to consult a medical professional"

Foolish robot! I am the medical professional!