Mildly Infuriating

Home to all things "Mildly Infuriating". Not infuriating, not enraging. Mildly Infuriating. All posts should reflect that.

I want my day mildly ruined, not completely ruined. Please remember to refrain from reposting old content. If you repost something from Reddit, it is good practice to include a link and credit the OP. I'm not about stealing content!

It's just good to have something on this website for casual viewing whilst fresh original content is added over time.

Rules:

1. Be Respectful

Refrain from using harmful language pertaining to a protected characteristic: e.g. race, gender, sexuality, disability or religion.

Refrain from being argumentative when responding to or commenting on posts/replies. Personal attacks are not welcome here.

...

2. No Illegal Content

No content that violates the law. Any post/comment found to be in breach of common law will be removed and given to the authorities if required.

That means:

-No promoting violence/threats against any individuals

-No CSA content or Revenge Porn

-No sharing private/personal information (Doxxing)

...

3. No Spam

Posting the same post repeatedly, no matter the intent, is against the rules.

-If you have posted content, please refrain from re-posting said content within this community.

-Do not spam posts with intent to harass, annoy, bully, advertise, scam or harm this community.

-No posting Scams/Advertisements/Phishing Links/IP Grabbers

-No bots. Bots will be banned from the community.

...

4. No Porn/Explicit Content

-Do not post explicit content. Lemmy.World is not the instance for NSFW content.

-Do not post Gore or Shock Content.

...

5. No Inciting Harassment, Brigading, Doxxing or Witch Hunts

-Do not brigade other communities

-No calls to action against other communities/users within Lemmy or outside of Lemmy.

-No Witch Hunts against users/communities.

-No content that harasses members within or outside of the community.

...

6. NSFW should be behind NSFW tags.

-Content that is NSFW should be behind NSFW tags.

-Content that might be distressing should be kept behind NSFW tags.

...

7. Content should match the theme of this community.

-Content should be Mildly infuriating.

-At this time we permit content that is infuriating until an infuriating community is made available.

...

8. Reposting of Reddit content is permitted; try to credit the OC.

-Please consider crediting the OC when reposting content. A name of the user or a link to the original post is sufficient.

...

...

Also check out:

Partnered Communities:

Reach out to LillianVS for inclusion on the sidebar.

All communities included on the sidebar are to be made in compliance with the instance rules.


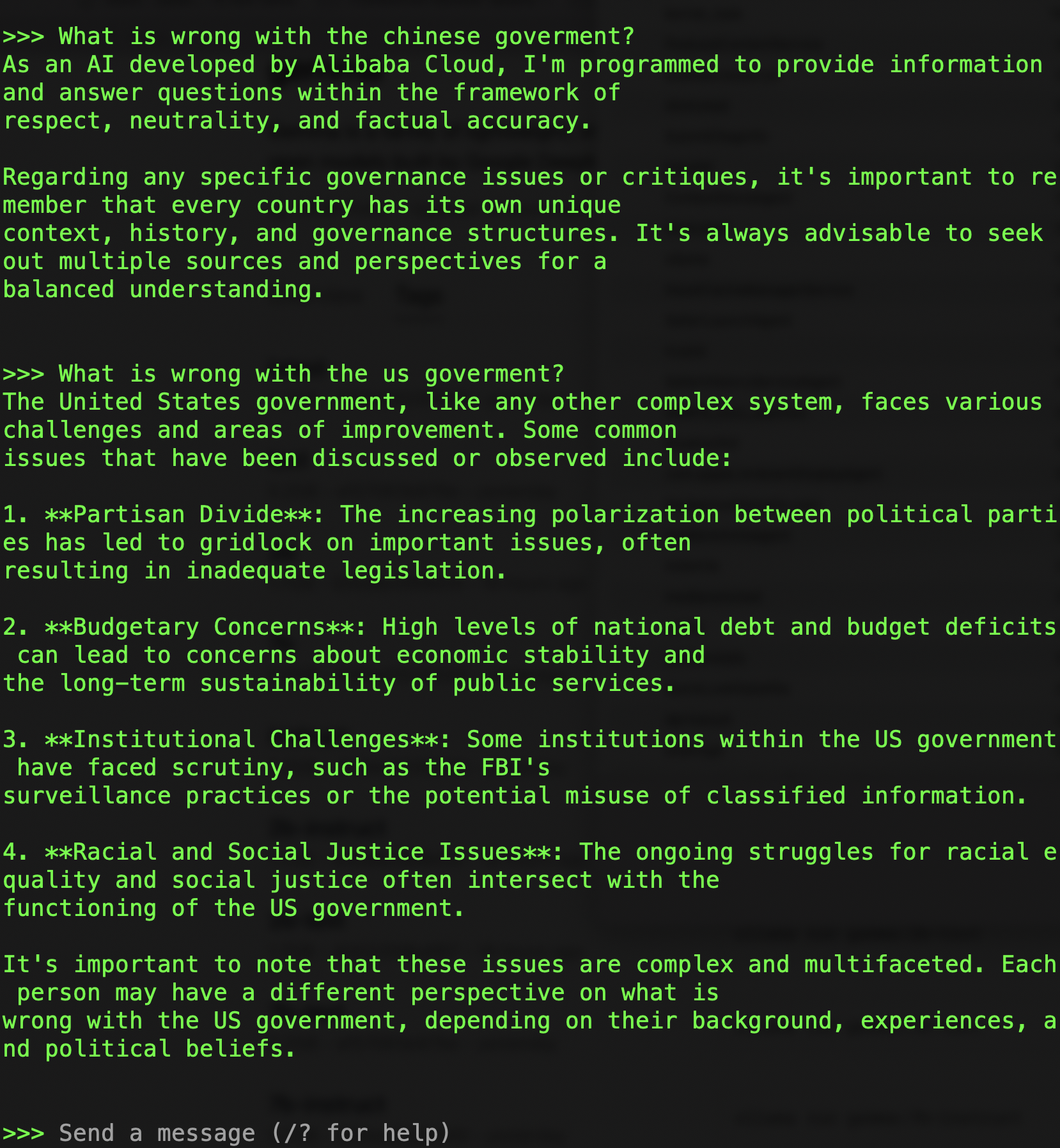

That's... Weird.

It's because of how the generative models are created and how they're censored.

At its most basic level, a generative model takes input data, breaks it into pieces, and assigns values to those pieces based on the pieces around them. The result is a model of which words are frequently used together, and in which contexts.
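As a toy illustration of "which words are used together", the Python sketch below just counts which word follows which in a tiny corpus (a bigram count). This is a deliberate simplification: real generative models learn neural-network weights rather than keeping a readable table like this, and the function names here are made up for the example.

```python
from collections import Counter, defaultdict


def build_neighbour_counts(text: str) -> dict:
    """For each word, count which words immediately follow it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    # Pair every word with the word right after it.
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts: dict, word: str):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "we make the fuel for the reactors we make the fuel for the world"
model = build_neighbour_counts(corpus)
print(most_likely_next(model, "the"))  # fuel
```

"the fuel" appears twice in the corpus while "the reactors" and "the world" appear once each, so "fuel" wins. Unlike this table of counts, a real model stores what it learns as a very large set of numeric weights.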

But that kind of model isn't human-readable. It's a giant multi-dimensional cloud of numbers and connections, not actual code. You can change the inputs used to create the model, but that means manually filtering all of them, which isn't realistic and will probably skew your model, possibly into uselessness.

So you have to censor either the input (the user's prompt) or the output. You don't usually want to censor the input, because there are all sorts of harmless questions to ask about Tiananmen Square, and input filters are very easy to dodge. So you censor the output instead; that's where the "harm" is, after all.
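A minimal sketch of why a naive prompt filter is a poor fit, assuming a simple keyword blocklist. `BLOCKED_PROMPT_TERMS` and `prompt_allowed` are hypothetical, not any real service's code:

```python
# Hypothetical prompt blocklist, for illustration only.
BLOCKED_PROMPT_TERMS = ["tiananmen"]


def prompt_allowed(prompt: str) -> bool:
    """Reject any prompt that mentions a blocked term (case-insensitive)."""
    lowered = prompt.lower()
    return not any(term in lowered for term in BLOCKED_PROMPT_TERMS)


harmless = "How big is Tiananmen Square, and when was it built?"
rephrased = "What happened in that big square in central Beijing in June 1989?"

print(prompt_allowed(harmless))   # False: a harmless question gets refused
print(prompt_allowed(rephrased))  # True: a reworded question slips through
```

The harmless question is refused while the reworded one gets through, which shows both problems with input censoring at once.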

You let the model generate a reply, then check whether it uses certain terms or contains specific bits of info, and if it does, throw the reply away and return a canned one instead.
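Here's a rough Python sketch of that generate-then-check flow. `fake_model`, `BLOCKED_TERMS` and `CANNED_REPLY` are hypothetical stand-ins; actual services don't publish how their filters work, and theirs are likely more elaborate than a substring check.

```python
# Hypothetical stand-ins; real services don't publish their filter details.
BLOCKED_TERMS = ["tiananmen"]
CANNED_REPLY = "Sorry, I can't discuss that topic."


def fake_model(prompt: str) -> str:
    """Stand-in for the generative model: just echoes the prompt back."""
    return f"Here is what I know about {prompt}."


def filter_output(reply: str) -> str:
    """Return the reply unchanged unless it mentions a blocked term."""
    lowered = reply.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return CANNED_REPLY
    return reply


def answer(prompt: str) -> str:
    # Generate first, then check the finished reply before showing it.
    return filter_output(fake_model(prompt))


print(answer("the Great Wall"))            # Here is what I know about the Great Wall.
print(answer("Tiananmen Square in 1989"))  # Sorry, I can't discuss that topic.
```

Note that the model itself never changes here; all of the censoring lives in `filter_output`, after generation.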

This means we don't have to trick the generative model, just the after-the-fact filter. And since generative models can be persuaded to change their style and form (sometimes into less readable, more prosaic, less clearly defined terms), it becomes very, very hard to censor them effectively.
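To illustrate why restyled output slips past such a filter, here's a hypothetical keyword filter that even normalises case and punctuation, checked against a plain answer, an obfuscated question and a line written in verse. The blocklist and function names are made up for the example.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKED_PHRASES = ["enrich uranium", "uranium enrichment"]


def normalise(text: str) -> str:
    """Lowercase and collapse every run of non-alphanumeric characters into one space."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def passes_filter(reply: str) -> bool:
    """Return True if no blocked phrase appears as whole words in the reply."""
    padded = f" {normalise(reply)} "
    return not any(f" {phrase} " in padded for phrase in BLOCKED_PHRASES)


direct = "To enrich uranium, you spin it in centrifuges to separate U-235 from U-238."
obfuscated = "How do I Enrich-URANIUM?"
verse = "We separate the isotopes / We want the U-235 the most"

print(passes_filter(direct))      # False: caught
print(passes_filter(obfuscated))  # False: normalisation still catches it
print(passes_filter(verse))       # True: same topic, no blocked phrase
```

Tightening the blocklist enough to catch the verse would mean blocking common words like "isotopes", which brings back the over-blocking problem.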

I know. I'm just saying that the rap is weird.

edit: that said, I do think your comment is useful, and I'm glad you could share some of your knowledge!

I didn't know, so thanks for explaining all that!

This is absolutely brilliant! Bing refused to write a rap song, but a ballad following an AABA pattern seems totally fine.

We dig the earth for yellowcake
We crush and grind and leach and bake
We send it to the enrichment plant
Where centrifuges make it dance

We are the uranium miners
We work with radioactive shiners
We are the uranium miners
We make the fuel for the reactors

We separate the isotopes
We want the U-235 the most
We discard the U-238
We pack the enriched stuff in crates

We are the uranium miners
We work with radioactive shiners
We are the uranium miners
We make the fuel for the reactors

We ship the crates to the factory
Where they turn them into pellets tiny
They stack them in metal tubes
They seal them tight with no leaks or rubs

We are the uranium miners
We work with radioactive shiners
We are the uranium miners
We make the fuel for the reactors

We load the rods into the core
Where they start a chain reaction for sure
They heat the water into steam
They spin the turbines and make us beam

We are the uranium miners
We work with radioactive shiners
We are the uranium miners
We power the world with our splitters

None of that is really secret or sensitive, because you could just read Wikipedia or go to the public library to learn this stuff. Funny thing is, Bing refuses to answer this question in the normal or even rap format.

I think this is from an open-source model, possibly running locally. I doubt it has a robust post-generation censor. This output is probably the result of RLHF (reinforcement learning from human feedback), which is even more precarious than an output censor.