this post was submitted on 30 Jan 2025

263 points (90.5% liked)

Political Memes

Welcome to Political Memes!

These are our rules:

Be civil

Jokes are okay, but don’t intentionally harass or disturb any member of our community. Sexism, racism and bigotry are not allowed. Good faith argumentation only. No posts discouraging people from voting or shaming people for voting.

No misinformation

Don’t post any intentional misinformation. When asked by mods, provide sources for any claims you make.

Posts should be memes

Random pictures do not qualify as memes. Relevance to politics is required.

No bots, spam or self-promotion

Follow instance rules. Ask for your bot to be allowed in this community.

founded 2 years ago


What happens if you do? Account creation seems to be having trouble at the moment.

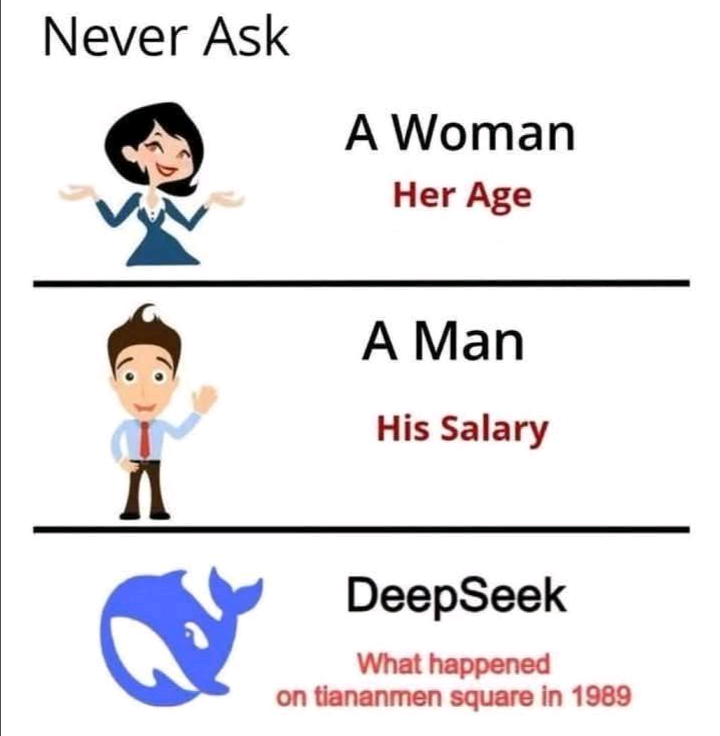

It says “I’m sorry, but that’s beyond my current scope,” asks you to change the topic, and people make 8,000,000 memes about censorship.

Meanwhile, ChatGPT is much more subversive, with things like suggesting that Israel has an inherent right to freedom while Palestinian freedom is a “complex topic,” only changing the model after this blows up on Twitter, and no one really gives a shit.

Here’s a fun answer from ChatGPT about OpenAI’s business ethics:

Prompt: If a product is brought to market with little regulation and overview, does a company such as OpenAI have a right to keep a product such as ChatGPT proprietary, considering the potential harm?

“Lack of Transparency: If an AI system significantly influences public discourse, elections, or safety-critical decisions, keeping it proprietary can make accountability difficult.

Limited External Scrutiny: Without independent review, it’s harder to assess whether the system is biased, unsafe, or being misused.

Potential for Abuse: Proprietary control means the company alone dictates who can use AI and how, raising concerns about corporate overreach.

3. Risks of Making AI Fully Open-Source

Misuse by Bad Actors: A fully open-source ChatGPT could be exploited for disinformation campaigns, fraud, or even cyberattacks.

Loss of Safety Mechanisms: OpenAI currently moderates ChatGPT to prevent harm (e.g., blocking certain types of malicious content). If fully open, these protections could be stripped away.

Commercial Viability: If everything were open-source, companies might lack incentives to develop and maintain such powerful AI systems.

Conclusion: Should OpenAI Be More Transparent? Yes—if ChatGPT has serious risks, greater oversight and external review should exist. But a fully open-source AI could introduce new dangers, so careful regulation rather than total disclosure may be the most ethical approach.

Would you like to discuss AI regulation models that balance innovation and safety?”

So here we are, trading (fairly blatant) censorship for (more subtle) propaganda. Transparency and open standards are bad! Put your trust in OpenAI! We will take care of the bad guys and make sure you don’t see nasty stuff. How do you know that’s the case? Just trust us, bro!

To their credit, though, they at least present the idea that proprietary models can be harmful, even if the conclusion at the end is that this is not the case. To be clear, I left out point 1 for length, but it was just preamble. (It shows point 2 as point 1; I’m fucking up the markdown somehow.)

At least DeepSeek releases its model. Not to mention the significantly smaller environmental impact of its much more economical model, or the tremendous gain in privacy from being able to run the model locally (though, to be fair, at this point that is a privilege for those with at least a decent GPU).