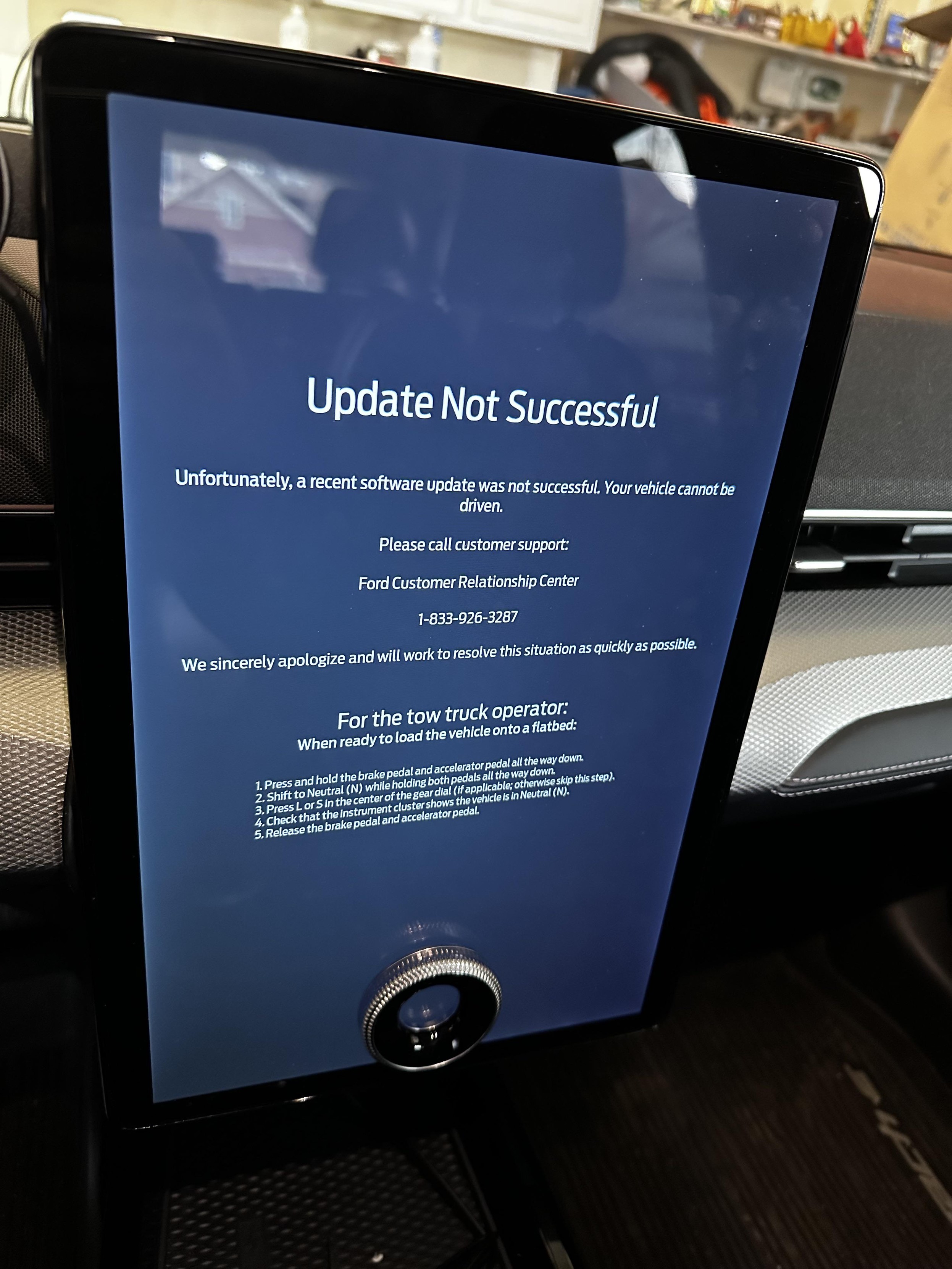

If your vehicle requires software validation to run and it cannot be overridden or fixed by yourself, it is not your vehicle. Don't throw away your money on this.

Late Stage Capitalism

This kind of shit is why I'm more into older tech. At least you actually own your stuff that way.

The Sono Motors Sion shouldn't have failed, but unfortunately it did. They promised an open system and were fairly open during development.

Does newer tech just kinda suck?

I'm the millennial version of a tech illiterate (I have very basic coding skills in Java and that's it), but I've noticed that everything just gets worse as time goes on, and I want a second opinion:

- old webpages (like from the 2000s) are fast and snappy

- new webpages take much longer to load

- new smartphones get bricked easily. I've had 2 new phones get bricked, but both my BlackBerry and my LG smartphone from 2005-2012 still work.

- Discord is way less responsive than Skype or AIM or IRC.

Everything new just seems more laggy and more prone to random catastrophic failure.

When I was young I actually didn't know what the BSOD was because I literally never experienced it. My first BSOD was in 2017 on Windows 8, even though I've been computing since 1998.

The golden age for "normie" consumer computing definitely feels like it took place in the 2000s, and ended somewhere around 2014.

Without giving anything specific away, I am a software developer and a consultant, and mostly work on web stuff.

I'll try to keep this short, but in general, yes. Basically, computers keep getting faster, which allows software developers to use higher-level libraries, which are actually less efficient, and thus your average piece of software actually takes more processing power and RAM than back in the day.

As well, because of those high-level libraries, programming is a lot easier than it used to be. Unfortunately, that means that we just hire cheaper developers that aren't as skilled, and they have a harder time tracking down and fixing bugs. Which is doubly worse because those higher-level libraries are black boxes, and you can't always fix things that arise inside of them easily.

But software development companies have basically figured out that shitty software doesn't really hurt their bottom line in the end. For the most part, people will use it if it's a name brand piece of software, like Google or Apple or Microsoft. They don't need to build high quality software because it's basically going to be used by default. The money will come in if you corner a market or if you build something unique, or contract with another business. It doesn't actually have to be high quality.

As well, websites make more money the more ads you put on them. So it doesn't matter how efficiently you build it; it's going to be slow. And it doesn't matter how slow it is, because you're going to make more money the more ads and tracking you have. All you need is good search engine optimization and you will get traffic by default. SEO is easier said than done, but the point is nobody really focuses on performance when it's more profitable to focus on search engines.

> As well, because of those high-level libraries, programming is a lot easier than it used to be. Unfortunately, that means that we just hire cheaper developers that aren’t as skilled, and they have a harder time tracking down and fixing bugs. Which is doubly worse because those higher-level libraries are black boxes, and you can’t always fix things that arise inside of them easily.

The Luke Smith/Mental Outlaw type chuds call these developers "soydevs".

Yeah, I'm not one to use insulting terms; it's more of a natural process of an industry lowering the bar to entry.

But there really is something to be said for those old applications that were built rock solid, even if they only came out with a new version once every four years.

Releasing a smaller feature set more frequently isn't wrong. I'd be happy getting high-quality application updates every month or so.

But as with all things, the analysis falls on the side that capitalism just doesn't incentivize the right things. Quarterly profit drives lots of features delivered poorly instead of a few good features delivered occasionally. Of course the developers get blamed for this when really they are just a product of a broken system. We invent insulting terms for them instead of going after the real problem, because, of course, we don't have an understanding of materialism in the West.

Oh well.

> Basically, computers keep getting faster, which allows software developers to use higher-level libraries, which are actually less efficient,

Could you elaborate on this?

So this means that an app that does basically the same thing today as in 2005 is going to be way more resource-intensive because of this, right?

Yeah, this was a quick and dirty thought, but effectively that's exactly what I mean. An application built from scratch today using modern high-level programming libraries will take more RAM and more CPU to do the same thing than an app written in 2005 does, generally speaking.
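To make the general point concrete, here's a rough Python illustration (not a benchmark of any real app): the same integers stored in a convenient, general-purpose `list` versus a packed `array`. The sizes are CPython-specific and approximate; the point is only that the high-level container costs several times more memory for identical data. `footprints` is just an illustrative helper.

```python
import sys
from array import array
from typing import Tuple


def footprints(n: int) -> Tuple[int, int]:
    """Approximate bytes used by n integers in a list vs. a packed array (CPython)."""
    boxed = list(range(n))
    # A list holds pointers to separate int objects, so count those objects too.
    # (CPython shares small ints like 0..256, which this slightly overcounts.)
    list_bytes = sys.getsizeof(boxed) + sum(sys.getsizeof(x) for x in boxed)
    # array("q") stores the same values as raw 8-byte signed integers in one buffer.
    packed = array("q", range(n))
    array_bytes = sys.getsizeof(packed)
    return list_bytes, array_bytes


list_bytes, array_bytes = footprints(1_000_000)
print(f"list:  {list_bytes / 1e6:.1f} MB")
print(f"array: {array_bytes / 1e6:.1f} MB")
print(f"ratio: {list_bytes / array_bytes:.1f}x")
```

The list version is the one most code reaches for by default because it's flexible; the packed version only works because we promised every value is an 8-byte integer.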

Of course, people who still write C or C++, or who choose Rust, Go, or some of the other low-level languages, or even Java without major frameworks, can still achieve the kind of performance an app written in 2005 could. But people coming out of college or code schools nowadays just reach for a big fat framework like Spring, or use a high-level language like JavaScript, Python, or Ruby with big frameworks, and their applications will use more resources by default.

The application might still be fast enough, though. I'm not even saying that an application written in Python will be slow, but I will say that an application written in Python will by default use about 10x more CPU and RAM than a similar application written in Rust. Maybe the application only uses 10 megabytes of RAM where the equivalent efficient application would use 1 megabyte; both of those are very efficient and very fast and would be just fine. But when the difference is between 10 gigabytes of RAM and 1 gigabyte of RAM, yeah, at that point you're pretty much just taking advantage of RAM being cheap.
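Taking the rough 10x figure above at face value (it's an estimate, not a measured constant), the arithmetic shows why the same ratio is harmless at one scale and expensive at another. `extra_ram_mb` is just an illustrative helper:

```python
def extra_ram_mb(efficient_mb: float, factor: float = 10.0) -> float:
    """Extra RAM used when a program needs `factor` times what an efficient version needs."""
    return efficient_mb * factor - efficient_mb


# The two cases from the comment: 1 MB vs. 10 MB, and 1 GB (1024 MB) vs. 10 GB.
for efficient_mb in (1, 1024):
    print(f"efficient: {efficient_mb} MB -> extra: {extra_ram_mb(efficient_mb):.0f} MB")
```

Same ratio both times; the difference is 9 MB versus roughly 9 GB of extra RAM.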

And it's not even necessarily a bad thing that we do this. There's just a balance to be had. It's okay to write in a higher-level language if it means you can get stuff done faster. But major applications nowadays choose to ship an entire browser as the base layer of the application, just because it's more convenient to write cross-platform code that way. That's too much, and there's already a lot of work going toward fixing this problem. We're just sort of seeing the worst of it right now.

> I'm the millennial version of a tech illiterate, I have very basic coding skills in Java and that's it

Being able to code at all already places you in the 90th percentile for tech literacy. Many people don't even know what a file is.

> When I was young I actually didn't know what the BSOD was because I literally never experienced it. My first BSOD was in 2017 on Windows 8, even though I've been computing since 1998

You've never gotten a BSOD on old versions of Windows?? My personal experience is that old versions of Windows (XP, 7) were much more unstable than new versions of Windows (10, 11).

> The golden age for "normie" consumer computing definitely feels like it took place in the 2000s, and ended somewhere around 2014

Why would the golden age of "normie" consumer computing have taken place in the 2000s, when there were pop-up ads that gave you malware and adware toolbars?

The 25th percentile user today has literally never interacted with a hierarchical filesystem. They do not even know what a file is. The Apple mobile ecosystem is so locked down that it's actually impossible to accidentally install malware. I say that now is the golden age of "normie" consumer computing, because tech has never been easier.

I say that for normies, tech has never been better.

Also, the Linux desktop has never been better.

We have reached the point where you can pick any laptop off the shelf and have it work out of the box with Linux. This used to not be possible!

Linux gaming has never been better, now that we have Proton. Games that used to be Windows exclusives now run perfectly on Linux. Linux is now fully viable for video gamers.

GUI tools are now so good that you can use Linux without ever touching the command line.

While Windows may have become worse, Linux has never been better.

Also, laptops used to not last 14 hours on a single charge. Tech is better now.

The tech illiterate probably aren't going to be picking up Linux, to be fair.

No, definitely not. But Linux has progressed so far that it's now accessible to people who are terminal-shy.

> You've never gotten a BSOD on old versions of Windows?? My personal experience is that old versions of Windows (XP, 7) were much more unstable than new versions of Windows (10, 11).

Correct. I never had a BSOD, and I used XP for thousands of hours in the early 2000s. Mostly RuneScape, the Halo trial, Neopets, dozens of various Flash game sites, etc.

I actually saw my friend have it a couple times, and I remember thinking how exotic the solid blue screen looked.

> Why would the golden age of "normie" consumer computing have taken place in the 2000s, when there were pop-up ads that gave you malware and adware toolbars?

Uhhh, because you can X them out? I never got malware or adware toolbars installed on my stuff. It felt like "it just werkz" back then, and now it doesn't anymore. I don't even do anything more complex now; it's just internet surfing and some Steam games. And Discord. Discord also feels extremely laggy: when you click on something, it takes a full split second to switch chatrooms.

> The 25th percentile user today has literally never interacted with a hierarchical filesystem.

This is only true for zoomers and boomers right?

Regardless of the Windows stuff, it extends to phones too. Smart-ish phones from before 2012 never gave me problems, while today's smartphones brick often and, even while working, sometimes feel randomly laggy in a way the old phones never did. I have no idea what's going on, but it feels like the software is so built up and strung out that it's like an impromptu house of cards stuck together with superglue.

> - old webpages (like from the 2000s) are fast and snappy
>
> - new webpages take much longer to load

Modern webpages are less like a page and more like a full-blown application. If you're not careful you'll get an unoptimized mess, which is exacerbated when you put a bunch of ads on top.

That being said I don't have memories of everything being snappy 20 years ago - there were messy scripts and gigantic images that made Geocities and Angelfire sites near unusable back then as well.

> That being said I don't have memories of everything being snappy 20 years ago - there were messy scripts and gigantic images that made Geocities and Angelfire sites near unusable back then as well.

Pages with dozens of embedded JPEG files that are larger than your monitor's resolution and are compressed at the highest quality. Easily a quarter to half of a megabyte each and take several minutes to load on dialup, and then the web server times out the connection because you're taking too long to download all these giant files at once.
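Rough numbers for the dialup claim, assuming an idealized 56 kbit/s modem (real connections usually ran slower) and ignoring protocol overhead and latency. The image size comes from the comment above; the count of 24 images is just an assumed stand-in for "dozens", and `download_seconds` is an illustrative helper:

```python
def download_seconds(size_bytes: int, link_bits_per_s: int = 56_000) -> float:
    """Idealized transfer time: ignores protocol overhead, latency, and retries."""
    return size_bytes * 8 / link_bits_per_s


per_image = download_seconds(500_000)  # "half of a megabyte", taken as 500,000 bytes
page = 24 * per_image                  # 24 images: an assumed stand-in for "dozens"
print(f"one image: {per_image:.0f} s, whole page: {page / 60:.0f} min")
```

Even at the nominal rate that's a bit over a minute per half-megabyte image and close to half an hour for the whole page, so a server timeout partway through isn't surprising.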

I don't miss those days. Not to say things are better now, but they weren't necessarily better back then either.

Oh, and RealPlayer. Fuck RealPlayer.

> Pages with dozens of embedded JPEG files that are larger than your monitor's resolution and are compressed at highest quality. Easily a quarter to half of a megabyte each and take several minutes to load on dialup

Sure, but wasn't there a sweet spot in the late 2000s where this wasn't much of an issue?

Also, that's basically what's happening with Discord now too: thousands of embedded JPGs, GIFs, and WebMs instead of just a link you click to view them. The end result is a laggy piece of software.
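A minimal sketch of the alternative described above, assuming a chat client that knows which messages are on screen: only those get their media embedded, and everything else stays a plain link until the user scrolls to it (the same idea as the HTML `loading="lazy"` attribute for images). `Message` and `render` are hypothetical; this isn't how Discord is actually implemented.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Message:
    text: str
    media_url: Optional[str] = None


def render(messages: List[Message], first_visible: int, visible_count: int) -> List[str]:
    """Embed media only for on-screen messages; everything else stays a plain link."""
    lines = []
    for i, msg in enumerate(messages):
        on_screen = first_visible <= i < first_visible + visible_count
        if msg.media_url is None:
            lines.append(msg.text)
        elif on_screen:
            # In a real client this is where the image/video would be fetched and decoded.
            lines.append(f"{msg.text} [embed: {msg.media_url}]")
        else:
            # Off-screen media costs nothing but a string until the user scrolls to it.
            lines.append(f"{msg.text} <{msg.media_url}>")
    return lines


history = [Message(f"msg {i}", f"https://example.com/{i}.gif") for i in range(1000)]
view = render(history, first_visible=990, visible_count=10)
print(sum("[embed:" in line for line in view), "of", len(view), "messages embed media")
```

The trade-off is a short delay when scrolling back, in exchange for not decoding a thousand GIFs nobody is looking at.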

> realplayer

Only used it a few times; what was so bad about it? Also, what are your thoughts on QuickTime?

Not GP, but: RealPlayer compressed everything to hell; the quality was absolutely atrocious. I believe it was buggy as well.

QuickTime was a behemoth that took ages to launch. To speed things up, it liked to auto-load and sit in the system tray, slowing system startup down even further and taking up precious RAM on the off chance that you might want to watch a QuickTime video. It also liked to register itself as the default player for other formats, because why would you use a decent player when you can use a shitty one made by Apple? Fuck QuickTime.

> That being said I don't have memories of everything being snappy 20 years ago - there were messy scripts and gigantic images that made Geocities and Angelfire sites near unusable back then as well.

I guess I wasn't really accessing Angelfire stuff until the late 2000s

Tech doesn't suck; it's the software quality that sucks.

Yeah, that's basically what I meant, but I don't know enough to blame it all on software.

for all I know the transistors might be gettin too small and these 7 nanometer thingies are buggin up the program more than the older 15 nm ones, or something idk

Shit, my mid-range motherboard has BIOS rollback functionality for cases like this. I'd expect the same out of a machine I might potentially have to, say, deliver a pregnant wife to the hospital in.
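Rollback of this kind usually relies on keeping two copies of the firmware, an A/B or dual-bank layout (Android's A/B system updates use a similar two-slot idea). A minimal sketch, assuming a hypothetical `DualBankFirmware` store and a `boots_ok` health check; a real implementation would also verify signatures and count failed boot attempts, and this isn't any specific carmaker's or motherboard vendor's process:

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class DualBankFirmware:
    """Hypothetical A/B store: updates go to the inactive bank, so the last
    known-good image is never overwritten while the new one is being tried."""
    banks: Dict[str, Optional[str]] = field(default_factory=lambda: {"A": "v1", "B": None})
    active: str = "A"

    def inactive(self) -> str:
        return "B" if self.active == "A" else "A"

    def update(self, image: str, boots_ok: Callable[[str], bool]) -> bool:
        previous = self.active
        target = self.inactive()
        self.banks[target] = image      # write to the spare bank only
        self.active = target            # try the new image
        if boots_ok(image):
            return True                 # new image becomes the running one
        self.active = previous          # failed check: fall back to the untouched bank
        return False

    def running(self) -> Optional[str]:
        return self.banks[self.active]


fw = DualBankFirmware()
print(fw.update("v2-corrupt", boots_ok=lambda img: "corrupt" not in img), fw.running())
print(fw.update("v2", boots_ok=lambda img: "corrupt" not in img), fw.running())
```

A failed update leaves the machine on the old image instead of bricking it; the cost is storage for a second copy of the firmware.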

Or we could just not wed the computer to the car on this level.

> I’d expect the same out of a machine I might potentially have to, say, deliver a pregnant wife to the hospital in.

lol first thing I thought of too. I've been in emergency situations where I needed to get to a hospital immediately, and if my car did this I'd be fucking livid. As dangerous as motorcycles are, at least I know that mine will never fail a software update or some shit, and if it doesn't start I can just bump start it to get it going and figure out what's wrong later.

I'm no Luddite, but there's beauty in a machine that's designed to be as simple to operate and maintain as possible.

Yeah, for something like a car I need a certain amount of reliability; we're looking at something that could potentially have a 20-year life cycle. (I've never owned one less than ten years old at the time of acquisition, anyhow.)

Yeah, but reflashing a motherboard is far less dangerous than reflashing a $30,000 car. Your computer couldn’t kill someone if something fails. As much as I hate this image and wished repair instructions were made public, this may be the wisest move from a liability perspective.

If having an onboard computer makes it that dangerous, it shouldn't be on the road at all!

Lol, and they're gonna charge you a lot for towing and fixing 😂

And depending on what kind of update this is, it's a 15 minute reprogram.

This isn't even planned obsolescence; this is just a lazy balls grab.

The level of surveillance and control tech in modern cars is really concerning.

My father's tractor, made in the People's Republic of Poland in 1964, operated very harshly and brutally, and stored outside, still works and cuts grass every summer. It literally needs no electricity: when it's pulled by another tractor to start the engine, you don't need a battery, thanks to its diesel engine with a sectional injection pump.

Lol, the future is jokes.

Did they just... stick a dial onto a touchscreen? Can I buy such a dial separately?

Innovating new excuses not to go to work

I think I first saw this here, but I'm not sure if it's the original: https://mastodon.social/@danluu/111643026109666672

Why tf do they not have a rollback option? Even Windows has driver rollback and it doesn't even fuckin' drive a vehicle.