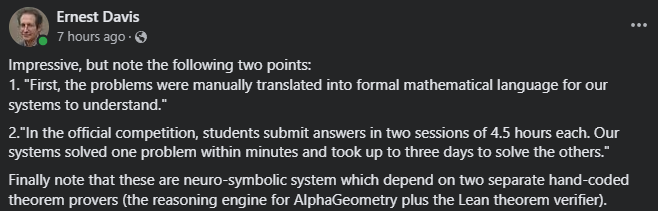

Also, choice sneer in the comments:

AlphaProof is more "AlphaZero doing self play against Lean" and less "Gemini reading human proofs"

'manual translation' undoubtedly doing some heavy lifting here

oh no i just learned something new about my wife

the wife sent this one to me

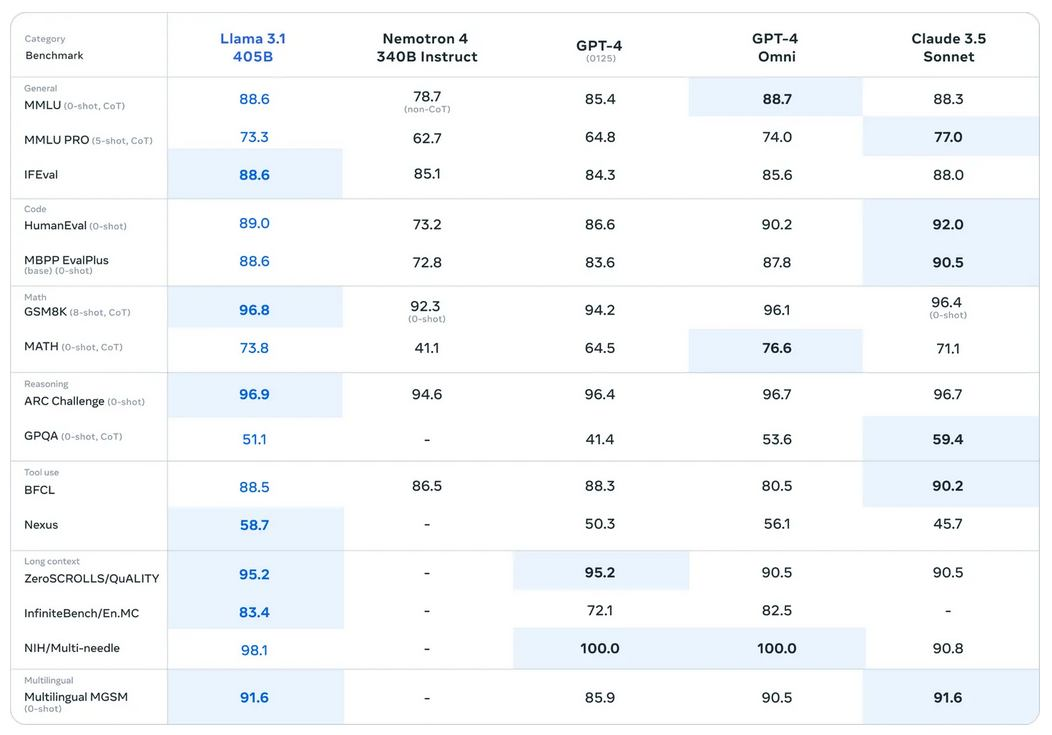

Azure/AWS/other cloud computing services that host these models are absolutely going to continue to make money hand over fist. But if the bottleneck is the infrastructure, then what's the point of paying an entire team of engineers 650K a year each to recreate a model that's qualitatively equivalent to an open-source model?

Yann and co. just dropped Llama 3.1. Now there's an open-source model on par with OAI and Anthropic, so who the hell is going to pay these nutjobs for access to their APIs when people can get roughly the same quality for free without the risk of having to give their data to a 3rd party?

These chuckle fucks are cooked.

the removal of undesirable elements from society

Let me guess who gets to decide what qualifies as undesirable

Thanks for the info. I had never heard of this one before & didn't know I was shit posting on behalf of Thiel's ilk ;_;

Article summary:

A close look into the people and entities who helped draft and advocate for California’s controversial AI safety bill (SB 1047) reveals that one of the bill’s co-sponsors could have a significant conflict of interest. Dan Hendrycks, an executive at Center for AI Safety (the firm whose lobbying arm helped draft the bill), co-founded a company called Gray Swan that offers AI safety compliance tools that seem positioned to provide the type of auditing data the bill would require; Gray Swan publicly launched on Tuesday. The Center's lobbying arm was set up so it could help draft SB 1047 after state senator Scott Wiener approached the Center.

Humans can’t beat AI at Go, aside from these exploits

kek, reminds me of when I was a wee one and I'd 0 to death chain grab someone in smash bros. The lads would cry and gnash their teeth about how I was only winning b.c. of exploits. My response? Just don't get grabbed. I'd advise "superhuman" Go systems to do the same. Don't want to get cheesed out of a W? Then don't use a strat that's easily countered by monkey brains. And as far as designing an adversarial system to find these 'exploits', who the hell cares? There's no magic barrier between internalized and externalized cognition.

Just get good bruv.

This is literally the dumbest shit I've read all week and it's been a pretty dumb week. I'm afraid I have to diagnose Roko with having the brain scamblies. There is no cure.

Morning lads, let's have a big week 💪