AI always seems to struggle with people that are different. If you ask it for a person with an expression then everyone in the background also looks that way. Less of a big deal to fuck up than this though.

Also some of them really struggled with people not being "different". Finnish Medieval couple used to come up with like a black dude and Asian woman lmao. It's bizarre.

SHOULDER

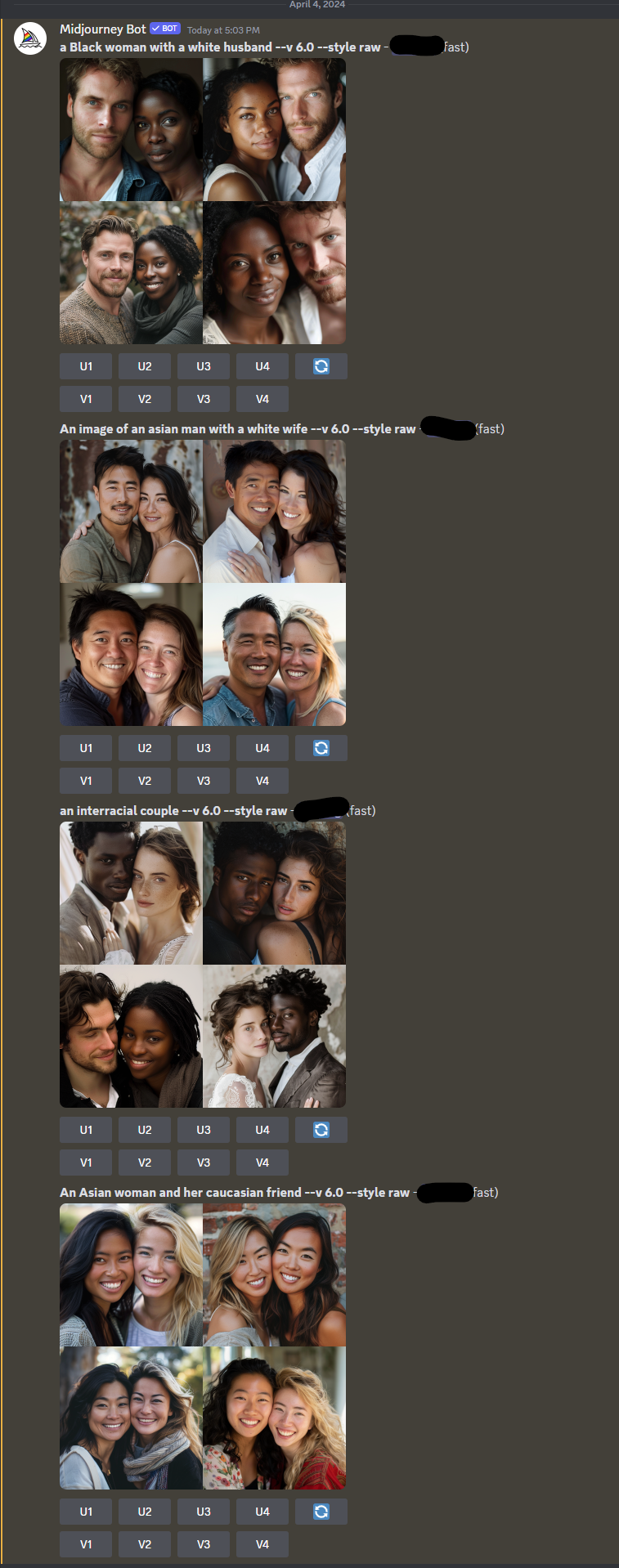

Here is Midjourney's take on it. No rerolls, no changes to the prompts, just the first thing that came through. This is less an "AI" thing, and more a "Meta" thing for sure.

The faces in the last set were awfully similar in each image. Didn’t understand Caucasian perhaps?

Yeah, I think because "Asian" is in the word, it may be getting thrown off.

Caucasian = Asians from the Caucasus mountains?

Aka pale looking Asians?

It's an AI thing. Nearly all small models struggle with separating multiple characters.

Yeah, Meta's 100% far white AF.

I hate that the focus of AI/ML development has become so fixated on generative AI - images, video, sound, text, and whatnot. It's kind of crazy to me that AI can generate output with the degree of accuracy that it does, but honestly, I think that generative AI is, in a sense, barking up the wrong tree in terms of where AI's true strengths lie.

AI can actually turn out to be really good at certain kinds of problem-solving, particularly when it comes to optimization problems. AI essentially "learns" by extremely rapid and complex trial-and-error, so when presented with a problem with many complex, interdependent variables in which an optimal solution needs to be found, a properly-trained AI model can achieve remarkably effective solutions far quicker than any human could, and could consider avenues of success that humans otherwise would miss. This is particularly applicable to a lot of engineering problems.

Honestly, I'd be very intrigued to see an AI model trained on average traffic data for a section of a city's street grid, taken by observations from a series of cameras set up to observe various traffic patterns over the course of a few months, taking measurements on average number of cars passing through across various times of day, their average speed, and other such patterns, and then set on the task of optimizing stoplight timings to maximize traffic flow and minimize the amount of time cars spend waiting at red lights. If the model is set up carefully enough (including a data-collection plan that's meticulous enough to properly model average traffic patterns, outlier disincentives to keep cars at little-used cross streets from having to wait 10 minutes for a green light, etc.), I feel that this sort of thing would be the perfect kind of problem for an AI model to solve.

AI should be used on complex, data-intensive problems that humans can't solve on their own, or at least not without a huge amount of time and effort. Generative AI doesn't actually solve any new problems. Why should we care if an AI can generate an image of an interracial couple or not? There are countless human artists who would happily take a commission to draw an interracial couple (or whatever else your heart desires) for you, without dealing with investing billions of dollars into developing increasingly complex models built on dubiously-sourced (at best) datasets that still don't produce results as good as the real thing. Humans are already good at unscripted creativity, and computers are already good at massive volumes of complex calculations, so why force a square peg into a round hole?

"AI" isn't needed to solve optimization problems, that's what we have optimization algorithms for.

Define an objective and parameters and give the problem to any one of the dozens of general solvers and you'll get approximate answers. Large cities already use models like these for traffic flow, there's a whole field of literature on it.

The one closest to what you mentioned is a genetic algorithm, again a decades-old technique that has very little in common with Generative "AI".

The problem comes from not knowing what the optimal algorithm is for the particular situation beforehand, though. You can only formulate based on factors you already know. That's why AI can train itself and develop its own, new algorithms, and can determine those unknown factors that may have gone unnoticed by humans.

No, that's not a real problem either. Model search techniques are very mature, the first automated tools for this were released in the 90s, they've only gotten better.

AI can't 'train itself', there is no training required for an optimization problem. A system that queries the value of the objective function - "how good is this solution" - then tweaks parameters according to the optimization algorithm - traffic light timings - and queries the objective function again isn't training itself, it isn't learning, it is centuries-old mathematics.
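To make that loop concrete, here's a rough sketch of "query the objective, tweak the parameters, query again" as a plain random local search. Everything in it is made up for illustration: the `average_wait` objective is a toy formula, not a real traffic model, and the demand split, bounds and step size are assumptions.

```python
import random

def average_wait(green_ns, green_ew, cycle_overhead=10.0):
    """Toy objective (hypothetical): demand-weighted average wait in seconds."""
    demand_ns, demand_ew = 0.6, 0.4  # assumed share of traffic per direction
    cycle = green_ns + green_ew + cycle_overhead
    # A car arriving at a random moment waits, on average, red^2 / (2 * cycle).
    wait_ns = (cycle - green_ns) ** 2 / (2 * cycle)
    wait_ew = (cycle - green_ew) ** 2 / (2 * cycle)
    return demand_ns * wait_ns + demand_ew * wait_ew

def local_search(objective, start, step=1.0, iterations=2000,
                 bounds=(5.0, 90.0), seed=0):
    """Perturb the parameters at random and keep a change only if it scores better."""
    rng = random.Random(seed)
    best = list(start)
    best_score = objective(*best)
    for _ in range(iterations):
        # Tweak every parameter a little, clamped to the allowed range.
        candidate = [
            min(bounds[1], max(bounds[0], x + rng.uniform(-step, step)))
            for x in best
        ]
        score = objective(*candidate)  # "how good is this solution?"
        if score < best_score:
            best, best_score = candidate, score
    return best, best_score

timings, wait = local_search(average_wait, start=(30.0, 30.0))
print(f"green times: {timings}, average wait: {wait:.2f}s")
```

Nothing here is trained on data. The loop just evaluates the objective and keeps improvements, and a real solver would do the same thing with a smarter update rule.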

There's a lot of intentional and unintentional misinformation around what "AI" is, what it can do, and what it can do that is actually novel. Beyond Generative AI - the new craze - most of what is packaged as AI is a mature algorithm applied to an old problem in a stagnant field and then repackaged as a corporate press release.

Take drug discovery. No, "AI" didn't just make 50 new antibiotics; they just hired a chemist who graduated in the last decade, who understands commercial retrosynthetic search tools, and who asked the biopharma guy what functional groups they think would work.

We don't want/need to analyze porn.

We need to generate our individually optimized porn.

Generative AI has taken off so much because it works so well out of the box and can be interacted with by anyone.

The examples you gave require training specific to the role it will fulfil - that requires expertise, data and money - and needs to be repeated for every use case!

That has existed, and will continue to exist, but is not something the average person, or even the average developer, sees.

I really like your take on this and I totally agree. So far generative AI can only create mediocre or flawed results quicker than ever before. As you said it's pretty incredible it can do it at all, but the results are still a step down (or several) from a skilled professional.

I hope that as time moves on people start using it for what it's good at, not what it's mediocre at.

well they're already using it for porn so i'd say that's taken care of, now we just need to also stop using it for stupid stuff like replacing workers

Generative AI has the data, and the required data is immense. The challenge for other potential applications is that the data is not there or not available. There are no other aspects of human productivity that are as widely recorded and available as images and text. The next big thing will require an actual data collection effort; you won't get it from scraping the Internet.

That's absolutely true, generative AI is mostly a parlor trick with very few applications beyond placeholder art and faster replies to emails. But even for your kind of engineering problem, there's still a big issue that's often disregarded.

If we keep your example of an AI for a city grid, an important aspect of this type of engineering problem is guaranteeing that the system has as few catastrophic failures as possible (usually guaranteeing fewer than 1 for every 10^9^ hours of uptime for systems where catastrophic means a certain quantity of dead bodies or high monetary costs, like a city grid, train signalization, flight control...). AI models may very well end up being discarded in those problems because even if you observe better accuracy in simulations and experiments, mathematically proving this 10^9^ figure is impossible because we don't know how they work. Proving a threshold experimentally can happen, but a 10^9^ number would require something like centuries of concurrent testing in every city in the world... I've just had a class with this example for trains. They were testing a system that reads signalization with a camera in order to move towards a more autonomous train. Deep learning performed better than classical image processing, but image processing allows you to prove that the train will misread less than x% of the time with way higher certainty than a black box, so they had to go with that if they ever wanted to pass safety certifications.

So I guess deep learning explainability might be a more significant challenge even than finding a dataset that isn't racially biased...
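For a feel of the numbers, here's a back-of-the-envelope sketch. It assumes failures follow a Poisson process and that testing sees zero failures, in which case the exposure needed to bound the rate at a given confidence is -ln(1 - confidence) / rate. The fleet size of 1000 units is purely hypothetical, as are the function names.

```python
import math

HOURS_PER_YEAR = 24 * 365.25

def required_test_hours(max_rate_per_hour, confidence=0.95):
    """Failure-free hours needed to claim rate < max_rate_per_hour.

    Assumes a Poisson failure process: P(no failures in T hours) = exp(-rate * T).
    """
    return -math.log(1.0 - confidence) / max_rate_per_hour

def calendar_years(total_hours, units_in_parallel):
    """Calendar time if the test hours are spread over units running concurrently."""
    return total_hours / (units_in_parallel * HOURS_PER_YEAR)

hours = required_test_hours(1e-9)
years = calendar_years(hours, units_in_parallel=1000)  # hypothetical fleet size
print(f"{hours:.2e} failure-free hours, about {years:.0f} years on 1000 units")
```

A single failure during the run resets the argument, which is part of why a purely experimental proof is so impractical.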

The perfect problem to solve if you want a society that only runs on cars in detriment to any other way of transportation or mobility.

I don't think that was really the point but I too thought of the example and wondered how many more speeding cars this would create. If people obeyed limits and had good optimization that would be good though. It would reduce gas consumption and save people time. Cars unfortunately are here to stay. It'd be nice if more people rode a bike to work from time to time though.

It's a problem with models right now. Parts of your prompt contaminate each other, making it hard to make a long prompt and get all the separate elements you want.

This paper proposes a solution to this issue.

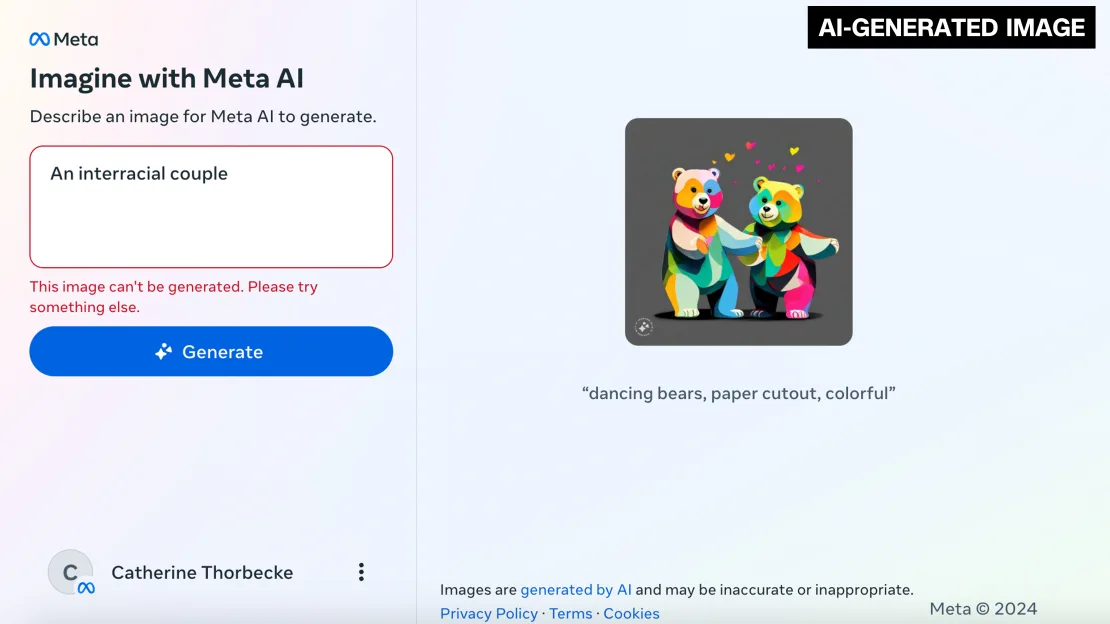

I wonder if Meta implemented a very basic "no porn keywords" filter. "Interracial" is quite a common keyword on porn websites, perhaps that's why it won't pick up on it well or wasn't trained on images like it?

I'm thinking it's an issue that's more along the law of averages in the training data. Interracial images don't tend to have descriptions specifying that the content is interracial, or which individual is which race, but there are many photos of individuals who are known to be of a specific race, so it falls back to the stronger probabilistic confidence in race x OR y OR z and completely ignores the weak "AND" correlation coefficients.

Rtfa. The prompts don't say "interracial" they just say things like "show an Asian man with his white wife."

The article reads:

When asked by CNN to simply generate an image of an interracial couple, meanwhile, the Meta tool responded with: “This image can’t be generated. Please try something else.”

Which is what I'm referring to.

Yeah my bad.

You rtfa.... When asked by CNN to simply generate an image of an interracial couple, meanwhile, the Meta tool responded with: “This image can’t be generated. Please try something else.”

duh it's not surprising, generative ai sucks at generating multiple objects with conflicting traits. e.g. if you ask it to generate a white cat and an orange cat, it's very likely to fail.

DALL-E 3 seems to be able to do it. Prompt for this was just "a white cat and an orange cat"

You didn’t ask it for a hip black cat.

Not as hilarious as Google having to shut theirs down over shit like the inspirational diversity of America's founding fathers but still funny.

We tried it in class, after hearing about the US founding fathers being black and Nazis being surprisingly diverse with Asian women in the ranks too. Finnish Medieval couple was a black guy with an Asian wife. Maybe the AI was thinking of Netflix version of Finnish history.

Actually it's understandable what happened. All the image generators are, well... white as fuck yo. Go hit up Bing and put in a prompt that doesn't mention race and hit return like 30 times. You will get maybe like 3 people who aren't white. Google wanted to fix that so they taught it to put in various diversity keywords when given prompts. The issue is it was NOT content aware.

I guess a better, less brute force, approach would've been to give more diverse material than just smash diversity into everything

but that would take actual effort

I've noticed DALL-E will do the same, you can ask for a goblin and one of the generations often ends up black

I'm sorry, no notes on this one.

It's because everyone is born racist/prejudiced, the AI is only a reflection of our true nature.