this post was submitted on 14 Dec 2023

xkcd

A community for a webcomic of romance, sarcasm, math, and language.

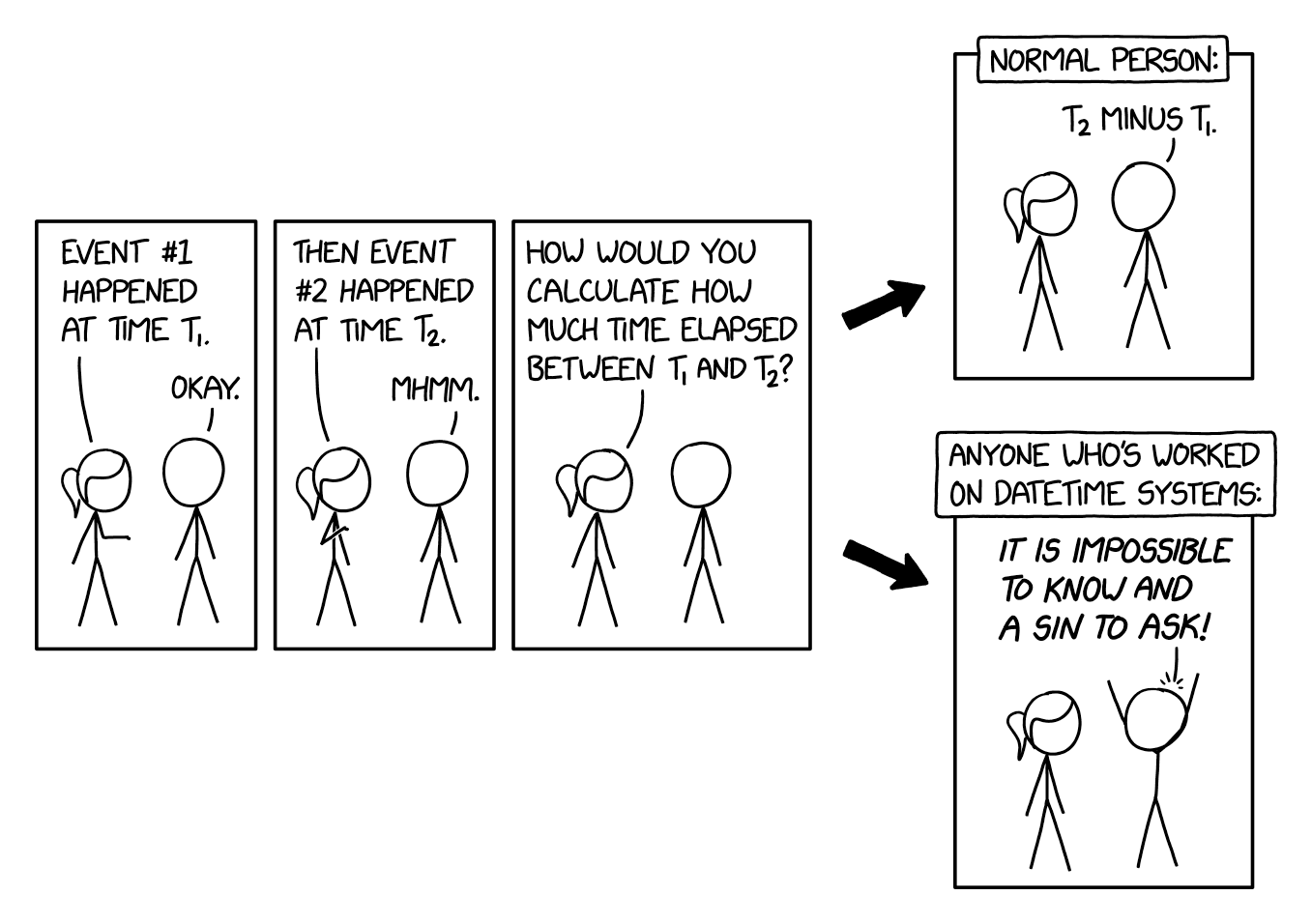

I just spent two days debugging a reporting endpoint that takes in two MM-YYYY parameters and tries to pull info between the first day of the month for param1 and the last day of the month for param2 and ended up having to set my date boundaries as

LocalDate startDate = new LocalDate(1, param1.getMonth(), param1.getYear()); //pretty straightforward, right?

//bump month by one, account for rollover, set endDate to the first of that month, then subtract one day

int endMonth = param2.month == 12 ? 1 : param2.month + 1;

LocalDate endDate = new LocalDate(1, endMonth, param2.year).minusDays(1);

This is extraordinarily simple for humans to understand intuitively, but to code it requires accounting for a bunch of backward edge/corner case garbage. The answer, of course, is to train humans to think in Unix epoch time.

Using YearMonth.atEndOfMonth would have been the easier choice there, I think
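For anyone following along, here is a minimal sketch of what that suggestion could look like with `java.time`. The class name `ReportRange`, the helper names, and the assumption that the parameters arrive as strings like `"12-2023"` are illustrative guesses, not the original poster's code.

```java
import java.time.LocalDate;
import java.time.YearMonth;
import java.time.format.DateTimeFormatter;

class ReportRange {
    // Assumed input format, based on the "MM-YYYY" parameters described in the post.
    private static final DateTimeFormatter MM_YYYY = DateTimeFormatter.ofPattern("MM-uuuu");

    // First day of the month named by the parameter.
    static LocalDate startOf(String param) {
        return YearMonth.parse(param, MM_YYYY).atDay(1);
    }

    // Last day of the month named by the parameter; month length,
    // leap years, and December are handled by the library.
    static LocalDate endOf(String param) {
        return YearMonth.parse(param, MM_YYYY).atEndOfMonth();
    }

    public static void main(String[] args) {
        System.out.println(startOf("11-2023")); // 2023-11-01
        System.out.println(endOf("12-2023"));   // 2023-12-31
        System.out.println(endOf("02-2024"));   // 2024-02-29
    }
}
```

No month arithmetic or rollover check is needed, which is the whole appeal.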

holy shit, yeah it would have. tyvm, I'll be putting in a PR first thing monday!

Would you mind trying to explain (ELI5 style) what you did before and why you are excited for this new method, for those of us who don't understand code?

it does the thing that I'm trying to do, but in a way that's been reviewed, vetted and tested by a lot of people. my code has only ever been seen by me and one other guy and has been tested to the best of my ability, which i hope is quite good, but one person can easily miss edge cases and weird coincidences.

Tried and tested. Now gotta brush up those searching skills and use an LLM to get your work done quicker

So how is the new thing different/better (other than fewer lines, I guess)? If you don't mind me asking

it's simpler and a lot easier for another engineer to look at and understand later, so they can verify that it's right or change it if it's wrong or we decide to do something a little bit different. it's also been reviewed and tested by a lot of people working in a lot of cases that are all a little bit different from one another, so the odds that their code is correct are better than the odds that my code is correct, all other things being equal

It's easier to understand, easier to review for correctness, and less likely to cause problems with additional changes in the future. Even though it sounds counterintuitive, software developers generally try to write as little code as possible. Any code you write is a potential liability that has to be maintained, so if you can instead just call code that others have already written and that has been tested, you'll want to do that. (Note that "less code" doesn't mean fewer lines of code, it means less logical complexity, which is often, but not always, also less in terms of characters/lines)

So like my English teacher taught me: Keep It Stupid Simple (though he would say "keep it simple, stupid" to some people in class, I am just realizing now, 20+ years later)

Exactly that! KISS is an often cited rule among software devs.

To break it down a bit further, the code that was provided is specifically trying to get the last day of a month, which I'll call Month X since it will vary. The code is doing these things, in this order:

All this to get the last day of the month from Month X. The reason they did it this way is so they didn't have to say "Is this February? Then get day 28. Is this January/March/etc? then get day 31." and so on.

The code that the other user provided will instead get the last day of Month X without having to do all those steps. It's doing something in the background to get the same data, but the coder doesn't have to worry about exactly how because they can trust it will work as expected.
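As a rough illustration of the two approaches described above, here is a sketch that puts the manual "first of next month, minus one day" logic next to the library call. It assumes `java.time`; the class and method names are made up for this example, and the manual version includes the December year rollover.

```java
import java.time.LocalDate;
import java.time.YearMonth;

class LastDayOfMonth {
    // Manual approach: go to the first day of the following month,
    // then step back one day.
    static LocalDate manual(int year, int month) {
        int nextMonth = (month == 12) ? 1 : month + 1;
        int nextYear = (month == 12) ? year + 1 : year; // December rolls into the next year
        return LocalDate.of(nextYear, nextMonth, 1).minusDays(1);
    }

    // Library approach: java.time already knows month lengths and leap years.
    static LocalDate library(int year, int month) {
        return YearMonth.of(year, month).atEndOfMonth();
    }

    public static void main(String[] args) {
        // Print both results for each month of a leap year so they can be compared.
        for (int month = 1; month <= 12; month++) {
            System.out.println(manual(2024, month) + " " + library(2024, month));
        }
    }
}
```

Both methods should agree for every month; the difference is how much of the logic you have to maintain yourself.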

It ultimately boils down to the user carving out a round piece of wood, fitting it on an axle and bolting it on, only to find that someone already has cheap wheels for sale that are more stable than what they just made.

Thanks!

You're welcome! A big part of coding is finding how other people solved the problems you're solving and finding how to incorporate their work into yours.

In the example you gave, wouldn't the year be off by one when param2.month is 12?

I was transcribing it from memory and that exact problem cost me like two hours when I was writing it the first time. Well spotted, now write me a unit test for that case.

Y'know, I legitimately said to myself "I bet they were writing that from memory and just forgot the edge case. I wonder if that was a problem when doing it originally?" before I wrote that comment. 😂 Time to get some Spock tests set up!
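A test for that case might look something like the sketch below. It uses plain Java checks rather than Spock, and the method `endDateWithoutYearRollover` is a hypothetical reconstruction of the bug being discussed, not the poster's actual code.

```java
import java.time.LocalDate;
import java.time.YearMonth;

class DecemberEdgeCase {
    // Hypothetical reconstruction of the bug: the month wraps to January,
    // but the year is never bumped.
    static LocalDate endDateWithoutYearRollover(int year, int month) {
        int endMonth = (month == 12) ? 1 : month + 1;
        return LocalDate.of(year, endMonth, 1).minusDays(1);
    }

    static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }

    public static void main(String[] args) {
        LocalDate expected = LocalDate.of(2023, 12, 31);

        // The buggy version lands a full year early for December.
        check(endDateWithoutYearRollover(2023, 12).equals(LocalDate.of(2022, 12, 31)),
                "expected the buggy version to return 2022-12-31");

        // Other months are unaffected, which is why the bug is easy to miss.
        check(endDateWithoutYearRollover(2023, 11).equals(LocalDate.of(2023, 11, 30)),
                "November should be fine");

        // The library call gets December right.
        check(YearMonth.of(2023, 12).atEndOfMonth().equals(expected),
                "atEndOfMonth should return 2023-12-31");

        System.out.println("December edge case checks passed");
    }
}
```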

Unix epoch time in UTC, making sure that your local offset and drift are current at the time of conversion to UTC...

i don't even care if it's wrong, I just want the code to be readable.

You should care if it's wrong.

at the resolution of clock drift in milliseconds when I'm running reports that are, at most, only specific to the day?

Clock drift? No. Time zones? Probably.

not really time zones either outside the edge case where a data point exists within delta of midnight so that the time zone drift would result in a date change

Time zones change. Relative times without time zones don't make sense.
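To make the midnight edge case concrete, here is a small sketch showing one instant falling on different calendar dates depending on the zone. The class and method names are invented for illustration, and the timestamp is an arbitrary example.

```java
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneId;

class DateDependsOnZone {
    // Calendar date of a given instant as seen in a given time zone.
    static LocalDate dateIn(Instant instant, String zoneId) {
        return instant.atZone(ZoneId.of(zoneId)).toLocalDate();
    }

    public static void main(String[] args) {
        // Half an hour before midnight UTC on New Year's Eve.
        Instant instant = Instant.parse("2023-12-31T23:30:00Z");

        System.out.println(dateIn(instant, "UTC"));              // 2023-12-31
        System.out.println(dateIn(instant, "Europe/Berlin"));    // 2024-01-01
        System.out.println(dateIn(instant, "America/New_York")); // 2023-12-31
    }
}
```

A data point like this would land in a different month (and year) of the report depending on which zone the date boundaries are interpreted in.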

All dates and times shall be stored and manipulated in Unix time. Only convert to a readable format at the top of the UI, and forget trying to parse user inputs :P that's just impossible

I picture this being read by the fred armisen "believe it or not, straight to jail" character

Unix epoch time is wrong too, as it doesn't include leap seconds, meaning a time difference can be off by up to 27 seconds (one for each leap second inserted since 1972).
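A quick sketch of the leap-second point, assuming `java.time.Instant` as the epoch representation. A leap second (23:59:60 UTC) was inserted at the end of 31 December 2016, so two real seconds elapsed between the two timestamps below, but epoch arithmetic only sees one. The class and method names are made up for this example.

```java
import java.time.Duration;
import java.time.Instant;

class LeapSecondGap {
    // Difference between two instants as measured on the epoch-seconds scale.
    static long epochSecondsBetween(String from, String to) {
        return Duration.between(Instant.parse(from), Instant.parse(to)).getSeconds();
    }

    public static void main(String[] args) {
        // The leap second 2016-12-31T23:59:60Z sits between these two timestamps,
        // but it has no epoch-second value of its own.
        long seconds = epochSecondsBetween("2016-12-31T23:59:59Z", "2017-01-01T00:00:00Z");
        System.out.println(seconds); // 1
    }
}
```

For day-level reports this does not matter, but it is why epoch differences are not an exact count of elapsed seconds.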