this post was submitted on 14 Dec 2023

1018 points (99.2% liked)

xkcd

A community for a webcomic of romance, sarcasm, math, and language.

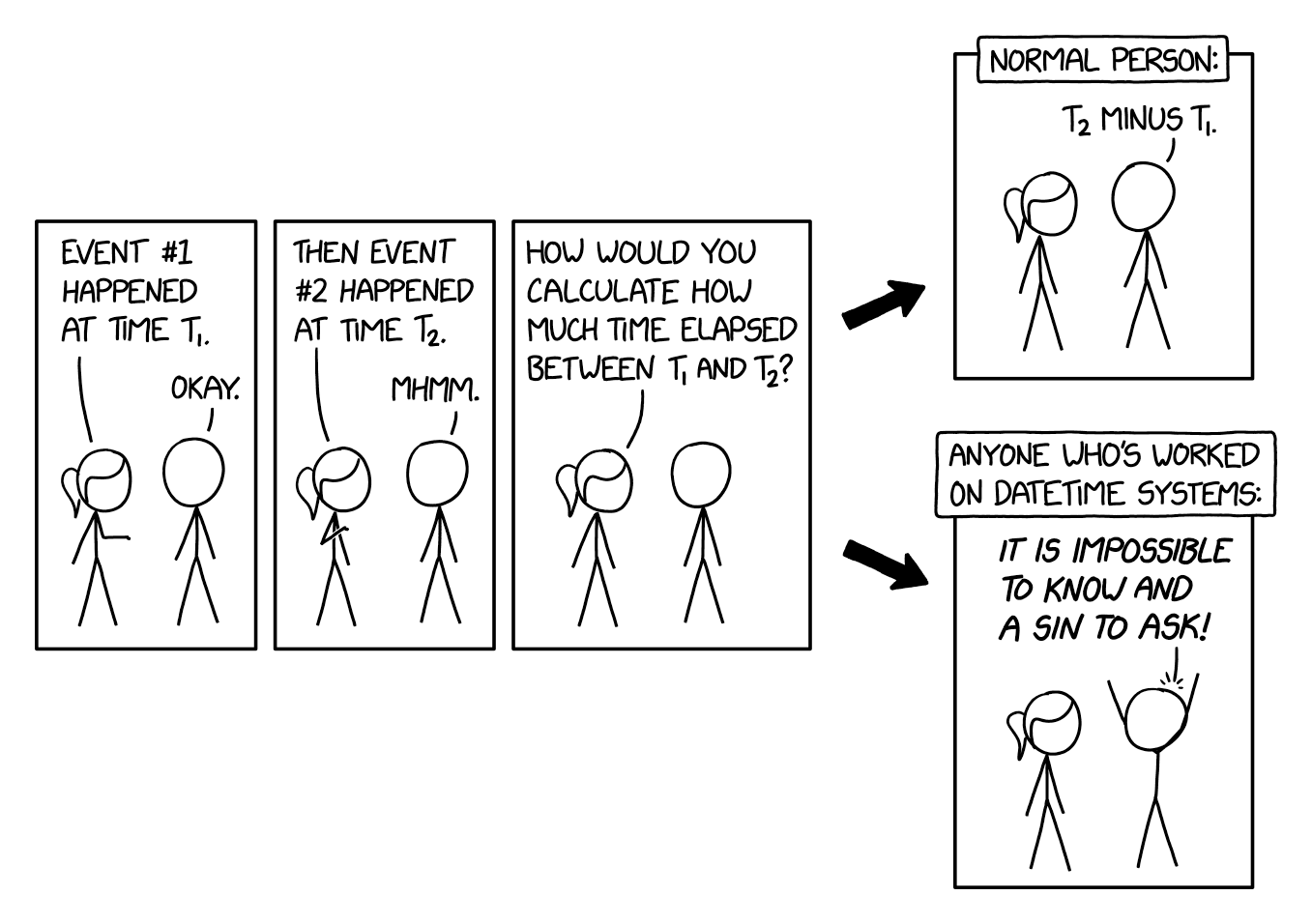

This is why we should just move to a universal time zone and stop with daylight savings.

We have that, it's called Unix time, and the only thing it doesn't account for is time dilation due to relativity.

it's perfect

If your system hasn’t been upgraded to 64-bit types by 2038, you’d deserve your overflow bug
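
For anyone wondering where 2038 comes from, here is a minimal sketch, assuming the classic setup of a signed 32-bit counter of seconds since 1970-01-01 UTC. The names `EPOCH`, `INT32_MAX`, and `last_32bit_moment` are made up for illustration.

```python
from datetime import datetime, timedelta, timezone

# Unix time counts seconds from this instant.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Largest value a signed 32-bit integer can hold.
INT32_MAX = 2**31 - 1


def last_32bit_moment() -> datetime:
    """Return the last instant a signed 32-bit seconds counter can represent."""
    return EPOCH + timedelta(seconds=INT32_MAX)


print(last_32bit_moment().isoformat())  # 2038-01-19T03:14:07+00:00
```

One second after that, a 32-bit signed counter wraps around to a large negative number, which is the overflow bug in question.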

Let's just make it 128-Bit so it's not our problem anymore.

Hell, let's make it 256-Bit because it sounds like AES256

64 bits is already enough not to overflow for 292 billion years. That’s 21 times longer than the estimated age of the universe.

If you want one-second resolution, sure. If you want nanoseconds a 64-bit signed integer only gets you 292 years. With 128-bit integers you can get a range of over 5 billion years at zeptosecond (10^-21 second) resolution, which should be good enough for anyone. Because who doesn't need to precisely distinguish times one zeptosecond apart five billion years from now‽
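
The ranges quoted here are easy to check. This is a rough sketch, assuming signed integers and a Julian year of 365.25 days; `range_in_years` is a hypothetical helper, not anything from a real library.

```python
# Seconds in a Julian year (365.25 days); an assumption, other year
# definitions shift the results by a fraction of a percent.
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def range_in_years(bits: int, ticks_per_second: int) -> float:
    """Years until a signed counter of the given width overflows."""
    max_ticks = 2 ** (bits - 1) - 1  # largest positive signed value
    return max_ticks / ticks_per_second / SECONDS_PER_YEAR


print(range_in_years(64, 1))        # 64-bit, one-second ticks: ~2.92e11 years
print(range_in_years(64, 10**9))    # 64-bit, nanosecond ticks: ~292 years
print(range_in_years(128, 10**21))  # 128-bit, zeptosecond ticks: ~5.4e9 years
```

So the 292 billion, 292, and "over 5 billion" figures all hold up, with the range counted in each direction from the epoch.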

If you run a realistic physical simulation of a star, and you include every subatomic particle in it, you’re going to have to use very small time increments. Computers can’t handle anywhere near that many particles yet, but mark my words, physicists of the future are going to want to run this simulation as soon as we have the computer to do it. Also, the simulation should predict events billions of years in the future, so you may need to build a new time tracking system to handle that.

Good point. You'd need at least 215 bits to represent all measurably distinct times (in multiples of the Planck time, approximately 10^-43 seconds) out to the projected heat death of the universe at 100 trillion (10^14) years. That should be sufficient for even the most detailed and lengthy simulation.
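
The 215-bit figure can be reproduced with integer arithmetic. A sketch under the same assumptions as the comment (Planck time rounded to 10^-43 s, an end date of 10^14 years), plus a Julian year of 31,557,600 seconds; all the names below are illustrative.

```python
SECONDS_PER_YEAR = 31_557_600   # Julian year, an assumption
YEARS = 10**14                  # end date used in the comment

# Planck time rounded to 1e-43 s, as in the comment.
ticks_rounded = YEARS * SECONDS_PER_YEAR * 10**43

# Planck time taken as roughly 5.391e-44 s, written as 5391e-47 to stay in integers.
ticks_finer = YEARS * SECONDS_PER_YEAR * 10**47 // 5391

# int.bit_length() gives the number of bits needed for an unsigned count.
print(ticks_rounded.bit_length())  # 215
print(ticks_finer.bit_length())    # 216
```

With the rounded Planck time the answer is 215 bits; the less rounded value tips it over to 216, so a 256-bit counter covers either case with plenty of room.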

With a 128-bit integer you can represent 340 undecillion (or sextillion if you use the long scale notation) seconds, which is equivalent to 10 nonillion (or quintillion, long scale) years. The universe will long have stopped being able to support life by then because stars will have stopped forming. Enough time would have passed that it could have happened a hundred quadrillion (a hundred thousand billion, long scale) times over, assuming we start counting from the birth of the universe.
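
A quick check of those numbers, as a sketch that assumes an unsigned 128-bit counter of whole seconds, a Julian year, and the 10^14-year figure from the comment above as the point where stars stop forming. The variable names are made up for illustration.

```python
SECONDS_PER_YEAR = 31_557_600        # Julian year, an assumption
STAR_FORMATION_ENDS_YEARS = 10**14   # figure taken from the thread

max_seconds = 2**128 - 1             # largest unsigned 128-bit value
max_years = max_seconds // SECONDS_PER_YEAR
times_over = max_years // STAR_FORMATION_ENDS_YEARS

print(f"{max_seconds:.3e} seconds")  # about 3.4e38, i.e. 340 undecillion
print(f"{max_years:.3e} years")      # about 1.08e31, i.e. 10 nonillion
print(f"{times_over:.3e} times")     # about 1.08e17, i.e. a hundred quadrillion
```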

Cries in vintage computer collection tears.

You are a monster, phoneymouse

I love the word "Epochalypse", from the wiki page you linked

I thought that's what datetime was based off of, tbh.

I know, but it's not standard anywhere in the world.

Give it a few more decades

Swatch's Internet Beats are making more and more sense every time Daylight Savings forces a time zone change. Why are we still using base 12 for time anyway?