this post was submitted on 10 Dec 2024


chapotraphouse



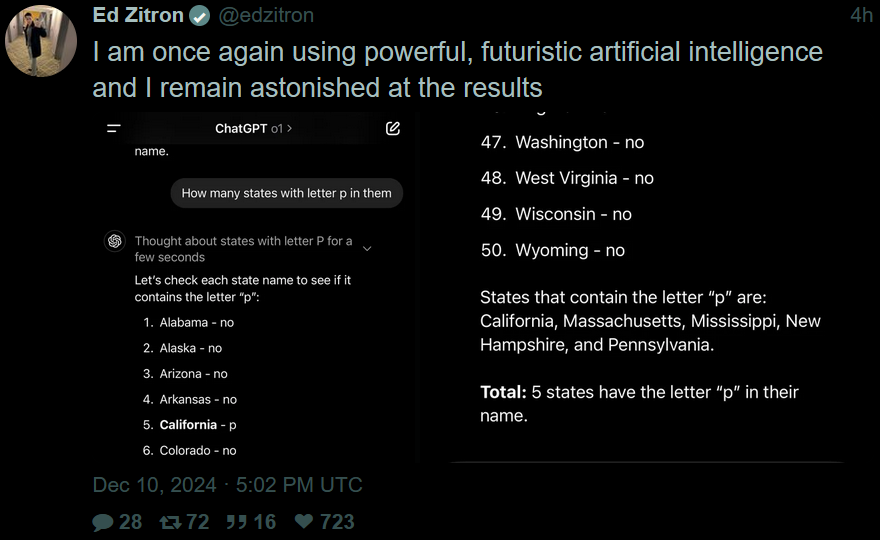

This fucking stupid quantum computer can't even solve math problems my classical von Neumann architecture computer can solve! Hahahaha, this PROVES computers will never be smart. Only I am smart! The computer doesn't even possess a fraction of my knowledge of anime!!

LLMs are categorically not AI, they're overgrown text parsers based on predicting text. They do not store knowledge, they do not acquire knowledge, they're basically just that little bit of speech processing that your brain does to help you read and parse text better, but massively overgrown and bloated in an attempt to make that also function as a mimicry of general knowledge. That's why they hallucinate and are constantly wrong about anything that's not a rote answer from their training data: because they do not actually have any sort of thinking bits or mental model or memory, they're just predicting text based on a big text log and their prompts.

They're vaguely interesting toys, though not worth how ludicrously expensive they are to actually operate. More importantly, they represent a fundamentally wrong approach, one that's receiving an obscene amount of resources in an attempt to make it not suck, without any real results to show for it. The sorts of math and processing involved in how they work internally have broader potential, but these narrowly focused chatbots suck and are a dead end.

These models absolutely encode knowledge in their weights. One would really be showing their lack of understanding about how these systems work to suggest otherwise.

Except they don't, definitionally. Some facts get tangled up in them and can consistently be regurgitated, but they fundamentally do not learn or model them. They no more have "knowledge" than image generating models do, even if the image generators can correctly produce specific anime characters with semi-accurate details.

"Facts get tangled up in them". lol Thanks for conceding my point.

I am begging you to raise your standard of what cognition or knowledge is above your phone's text prediction lmao

Don't be fatuous. See my other comment here: https://hexbear.net/comment/5726976