this post was submitted on 25 Jul 2023


Programming





We're not able to properly define general intelligence, let alone build something that qualifies as intelligent.

I can think of four aspects needed to emulate a human response: basic knowledge of various topics, logical reasoning, contextual memory, and the ability to communicate. ChatGPT seems to possess all four to a certain degree.

Regardless of what you think is or isn't intelligent, for programming help you just need something that can go through tons of text, present the information most likely to help you, and maybe modify it a little to fit your context. That doesn't sound too far-fetched considering what we have today and how much information is available on the internet.

LLMs cannot reason, nor can they communicate. They can give the illusion of doing so, and only if they have enough data in the domain you're prompting them with. Try topics that aren't as popular on the internet and the illusion breaks down pretty quickly. This isn't a case of "we're not there yet"; it's a fundamental limitation of the technology. LLMs are designed to mimic the style of a human response; they don't have any logical capabilities.

You're the one who brought up general intelligence, not me, but to respond to your point: the problem is that people had an incentive to contribute that text, and it wasn't necessarily monetary. Whether it was for internet points or just to build a reputation, people got something in return for their time. With LLMs, that incentive is gone, because no matter what they contribute, it's going to be fed to a model that won't attribute those contributions back to them.

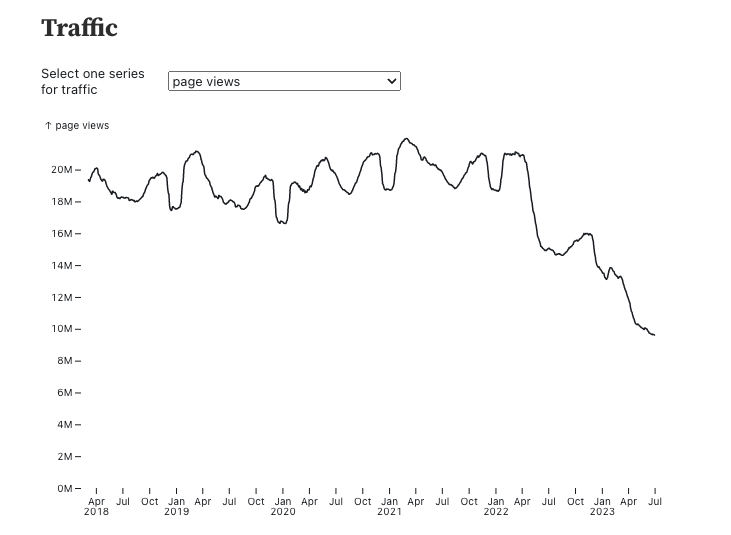

Today LLMs are impressive because they use information contributed by millions of people. The more people rely on ChatGPT instead of contributing, the less information will be available to train it on, and the less impressive these models will become over time.