Which is worse, this or the C2PA specification?

Piracy: ꜱᴀɪʟ ᴛʜᴇ ʜɪɢʜ ꜱᴇᴀꜱ

⚓ Dedicated to the discussion of digital piracy, including ethical problems and legal advancements.



Is it already out? I've run into a lot of errors over the past week, ever since I started using Rethink DNS. It only happens on a couple of sites, particularly if I do something dumb and click a Google sponsored result.

Looks like we will have to switch to web3 for good

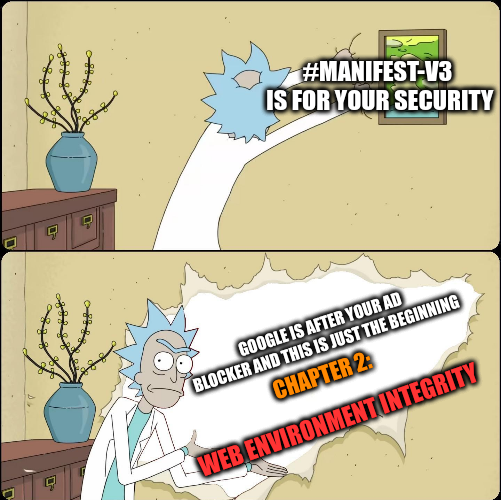

Web Environment Integrity is not as bad as people make it out to be.

Yeah, I absolutely agree that it's terrible and a bad idea. We don't need MORE DRM in our browsers (I'm looking at you, Widevine, although Firefox worked around it by running the DRM in an isolated container). But its main purpose is to detect automated requests and effectively block web scraping with a DRM system, NOT to detect ad blockers. It ensures two things: that your user agent can be trusted, and that you're a real, non-automated user. It doesn't prevent web pages from being modified, like some people are saying.

There's a lot of misleading information about the API: it doesn't "verify the integrity" of the web page or DOM itself.

It works by creating a token that a server can verify, for example when a user creates a new post. If the token is invalid, the server may reject the action you're trying to perform. (In browsers that don't support it, this will probably just lead to a forced captcha...)
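To make the flow above concrete, here's a rough server-side sketch of the decision: accept, reject, or fall back to a captcha. It's a toy model, not the real API. The names `ATTESTER_KEY`, `issue_token` and `handle_new_post` are all hypothetical, and the shared-secret HMAC is only a stand-in for whatever signature scheme a real attester would use. The point it shows is that the server only checks a token bound to the request; nothing here looks at the page or DOM.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical shared secret standing in for an attester's signing key.
ATTESTER_KEY = b"hypothetical-attester-key"


def issue_token(content_binding: str) -> str:
    """Simulate an attester issuing a token bound to one specific request."""
    mac = hmac.new(ATTESTER_KEY, content_binding.encode(), hashlib.sha256)
    return mac.hexdigest()


def handle_new_post(body: str, token: Optional[str]) -> str:
    """Decide what the server does with a 'create post' request."""
    if token is None:
        # Browser doesn't support the API: fall back to a forced captcha.
        return "captcha"
    expected = issue_token(body)
    # Constant-time comparison of the expected and presented tokens.
    if hmac.compare_digest(expected, token):
        return "accepted"
    return "rejected"


print(handle_new_post("hello", issue_token("hello")))  # accepted
print(handle_new_post("hello", issue_token("other")))  # rejected
print(handle_new_post("hello", None))                  # captcha
```

Binding the token to the post body means a token captured from one request can't be replayed to submit different content, which is what makes it useful against scripted posting.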

Also, here's a solution: Just don't use Chrome or any Chromium-based browsers.

There is already a Manifest V3-compatible version of uBlock Origin, so can someone explain to me how it's going to end ad blockers?