this post was submitted on 18 Oct 2024

technology

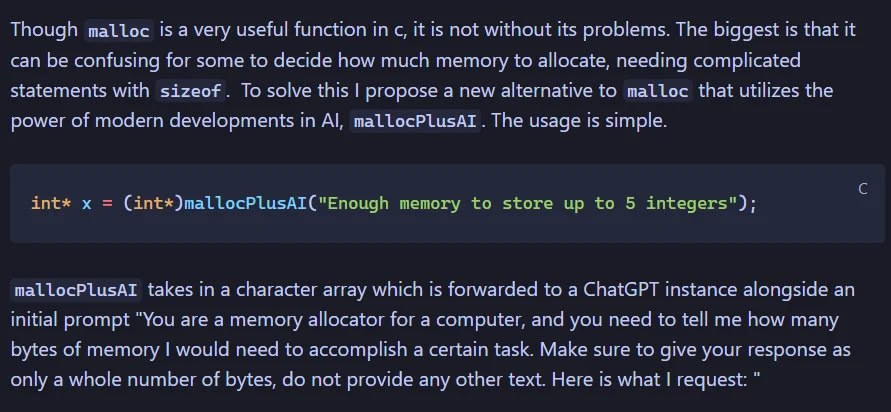

I'm pretty sure some of the newer ChatGPT-like products (the consumer-facing interface, not the raw LLM) do in fact do this. They try to detect certain types of inputs (e.g. math problems or requests for the current weather), convert them into an API request to some other service, and return that result instead of an LLM output. Frankly, it comes across to me as an attempt to make the "AI" seem smarter than it really is by covering up its weaknesses.
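To make the idea concrete, here is a rough sketch of that kind of front-end routing. It is not how any particular product works: `route`, `fake_llm`, `fake_weather`, and the regex rules are all made-up names and assumptions for illustration, with stubs standing in for the real LLM and weather service.

```python
import ast
import operator
import re

# Hypothetical router: every name and rule here is an illustrative assumption.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}
MATH_RE = re.compile(r"[\d\s.+\-*/()]+")


def eval_arithmetic(expr):
    """Evaluate +, -, *, / on numeric literals without calling eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.operand))
        raise ValueError("unsupported expression")
    return walk(ast.parse(expr, mode="eval").body)


def fake_llm(message):
    # Stub standing in for a real model call.
    return f"(stub) LLM reply to: {message}"


def fake_weather(city):
    # Stub standing in for a real weather API.
    return f"(stub) forecast for {city}"


def route(message, llm=fake_llm, weather_api=fake_weather):
    """Return (handler_name, reply); only fall back to the LLM if no rule matches."""
    text = message.strip().rstrip("?").strip()
    if MATH_RE.fullmatch(text) and any(c in text for c in "+-*/"):
        try:
            return ("calculator", str(eval_arithmetic(text)))
        except (ValueError, SyntaxError, ZeroDivisionError):
            pass  # not valid arithmetic after all, let the LLM handle it
    match = re.search(r"weather in ([A-Za-z ]+)", text, re.IGNORECASE)
    if match:
        return ("weather", weather_api(match.group(1).strip()))
    return ("llm", llm(message))
```

With these stubs, `route("2 + 2 * 3")` is answered by the calculator branch and never reaches the model, which is the "covering up its weaknesses" behaviour described above.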

I think ChatGPT passes mathematical input to Wolfram Alpha.

Yeah, Siri has been capable of doing that for a long time, but my actual hope would be that, rather than just handing the user the API response, the LLM could keep operating on that response and do more with it, composing several API calls. But that's probably prohibitively expensive to train, since you'd have to do it billions of times to get the plagiarism machine to learn how to delegate work to an API properly.
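For what it's worth, the "keep operating on the response" part could look roughly like the loop below. This is only a sketch under stated assumptions: `run_agent`, `scripted_model`, and the `geocode`/`forecast` tools are hypothetical, and the "model" is a hard-coded script rather than a trained LLM, so it says nothing about how hard the training side is.

```python
def run_agent(question, model, tools, max_steps=5):
    """Feed each tool result back to the model until it gives a final answer."""
    transcript = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        action = model(transcript)
        if action["type"] == "final":
            return action["text"]
        # The model asked for an API call: run it and append the result,
        # so the next model step can build on it.
        result = tools[action["tool"]](**action["args"])
        transcript.append({"role": "tool", "name": action["tool"], "content": result})
    raise RuntimeError("model did not finish within max_steps")


def scripted_model(transcript):
    # Hypothetical stand-in for an LLM: picks the next step based on
    # how many tool results it has seen so far.
    results = [e["content"] for e in transcript if e["role"] == "tool"]
    if len(results) == 0:
        return {"type": "call", "tool": "geocode", "args": {"city": "Berlin"}}
    if len(results) == 1:
        lat, lon = results[0]
        return {"type": "call", "tool": "forecast", "args": {"lat": lat, "lon": lon}}
    return {"type": "final", "text": f"Forecast: {results[1]}"}


# Stub tools standing in for real APIs.
TOOLS = {
    "geocode": lambda city: (52.52, 13.4),
    "forecast": lambda lat, lon: f"(stub) mild at {lat}, {lon}",
}

answer = run_agent("What's the weather in Berlin?", scripted_model, TOOLS)
```

The second call depends on the output of the first, which is the composition being asked for: the result goes back into the transcript instead of straight to the user.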