this post was submitted on 19 Aug 2024




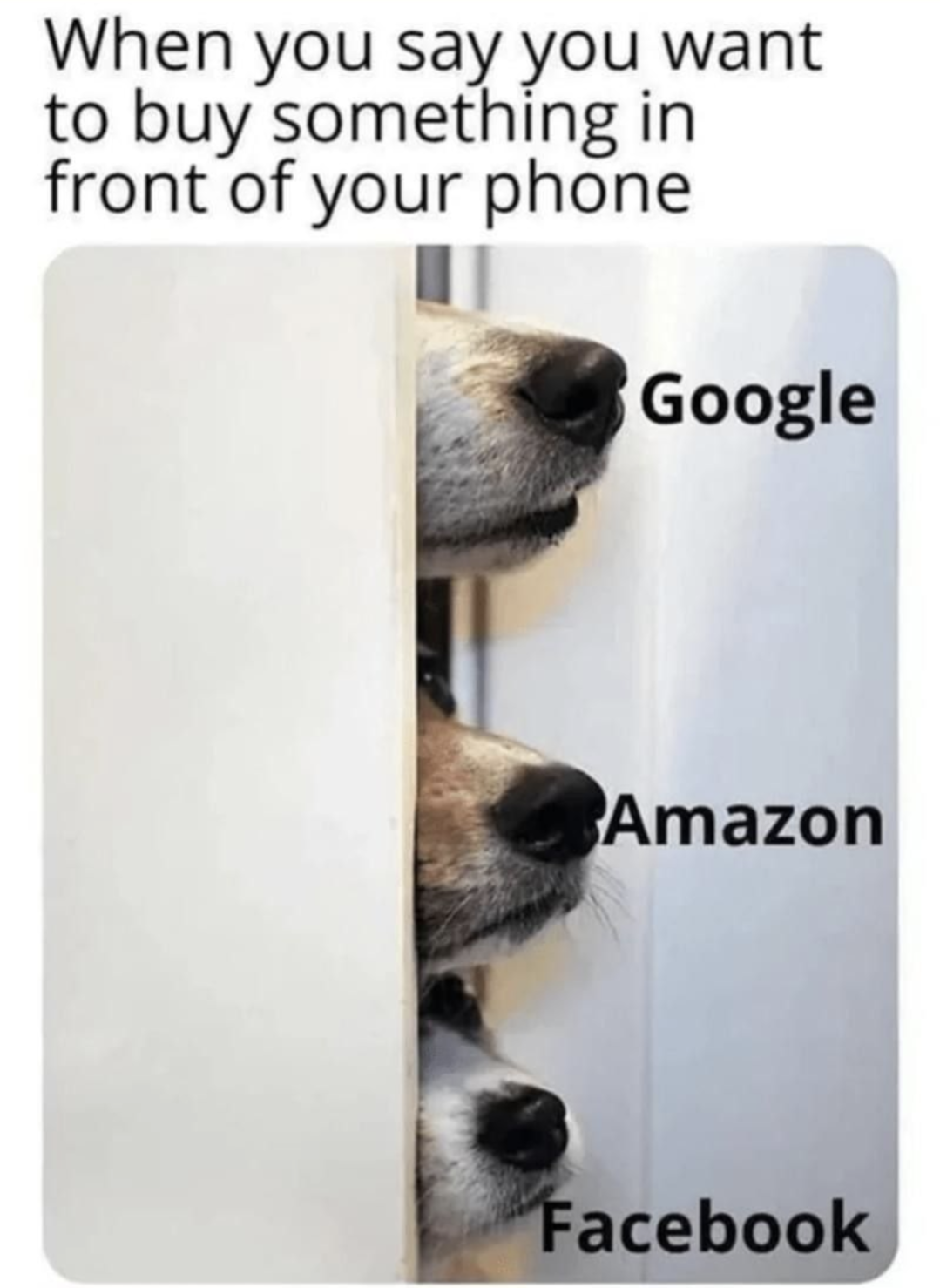

Well, I'd argue that you can't change "OK Google" because that's a great form of advertising. I've even seen movies where they use "Hey Siri" or "Alexa" as product placement.

For sure.

That doesn't mean they don't “accidentally” record completely irrelevant conversations.

And that also doesn't mean that what it does record isn't being aggregated so you can be marketed to.

There have been plenty of tests where CPU and network usage were monitored on a single phone.

The phone was measured once in a quiet, soundproof room and once sitting next to a conversation.

Zero difference.

Recording and parsing audio would kill your battery. And it's not necessary when most people freely provide their data when using Facebook, Instagram, TikTok, and so on.

There has been zero proof of illegal recording, even though it would be easy to find.

Perhaps we're misunderstanding something.

It's a fact that plenty of devices have assistant software running 24/7, with an open mic. We can agree that the key phrase is detected locally via some low-power chip or something similar.

I'm saying that these virtual assistants are capturing and saving recordings, even when they aren't explicit commands. Those recordings can then be used to further profile a user.

Mozilla even says that Amazon claims you can delete recordings, but that Amazon will continue to use data collected from those recordings even after they are deleted. This is a problem, IMO, and it can certainly explain many of the coincidences people are witnessing.

Except that Amazon has had to pay out $25 million for keeping kids' recordings.

And the State of Texas has sued Google for illegally collecting voice data.

In California, several class-action lawsuits against Google for illegally recording and using conversations without consent have also been certified.

Or that Apple was caught secretly recording voice conversations, even when the user had opted out. Apple claimed this was a "bug". LOL

There are so many cases like this that we know of. I can't imagine how many of these privacy nightmares we haven't been made aware of.

One is a bug, one is just a lawsuit that went nowhere, and one is just an accusation (Google did pay a fine, but for geolocation tracking, not voice). The Amazon one is pretty bad, but again, it's not a phone!!!

Yes, if your phone assistant accidentally activates, your voice might be uploaded without you knowing. That's a fact. But you agreed to that by enabling the voice assistant (it even warns you about this).

If you switch your voice assistant off (I have) then you don't have this issue. What is so difficult to understand here?

The low-power chips really just listen for a few syllables, so they can easily produce false positives. That's just a technical aspect of how they work.

So, just a thought: if they are already detecting highly optimized keywords locally, what's to stop them from adding common keywords for advertising?

In the given anecdote about babies and diapers, you would literally just need a baby keyword. It gets triggered, the phone tells the Mothership it heard about babies, and suddenly you get diaper ads. It wasn't listening to every single word and it wasn't parsing the sentence; it was just looking for highly optimized ad keywords. You could even set a threshold for how often certain ad keywords are triggered, to avoid false positives in detection.
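A minimal sketch of the mechanism this comment hypothesizes, assuming a hypothetical on-device detector that emits one label per detected keyword. The keyword list, the `THRESHOLD` value, and the `report` callback are invented for illustration and do not describe any real phone's software. The point it shows is that only a keyword label, not audio or a transcript, would need to leave the device.

```python
from collections import Counter
from typing import Callable, Iterable

# Hypothetical ad keywords a local detector might listen for (illustration only).
AD_KEYWORDS = {"baby", "diaper", "vacation"}

# Hypothetical: a keyword must be detected this many times before it is reported.
THRESHOLD = 3


def process_detections(
    detections: Iterable[str],
    report: Callable[[str], None],
    threshold: int = THRESHOLD,
) -> Counter:
    """Count ad-keyword hits and report each keyword once its count reaches the threshold."""
    counts: Counter = Counter()
    reported: set[str] = set()
    for keyword in detections:
        if keyword not in AD_KEYWORDS:
            continue  # anything that is not an ad keyword is ignored entirely
        counts[keyword] += 1
        # Requiring repeated hits filters out one-off false positives;
        # each keyword is reported at most once.
        if counts[keyword] >= threshold and keyword not in reported:
            report(keyword)  # only the label is sent, never audio
            reported.add(keyword)
    return counts


if __name__ == "__main__":
    sent: list[str] = []
    process_detections(["baby", "car", "baby", "diaper", "baby"], sent.append)
    print(sent)  # prints ['baby']
```

Here "diaper" is detected once and stays below the threshold, so only "baby" is reported.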

It's not listening to actual words; that's already too complex (you'd have to parse language for that, which those low-power chips can't do). It's listening for syllables, Oh-Kay-Goo-Gle or whatever. It depends on the chip and implementation, of course, which is also why you get false positives when someone says something similar.

If you add more syllable patterns to listen for, your phone would activate literally all the time, with tons of false positives.
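A rough back-of-the-envelope illustration of this point. It assumes each keyword pattern misfires independently at the same rate, and that misfires follow a Poisson process. The rate of 0.5 false triggers per keyword per day is invented purely for illustration and is not a measured figure for any real chip. Under these assumptions, expected false activations grow linearly with the number of patterns.

```python
import math


def expected_false_triggers(num_keywords: int, rate_per_keyword: float) -> float:
    """Expected false activations per day if every keyword pattern misfires independently."""
    return num_keywords * rate_per_keyword


def prob_at_least_one(num_keywords: int, rate_per_keyword: float) -> float:
    """P(at least one false activation in a day), modelling misfires as a Poisson process."""
    return 1.0 - math.exp(-expected_false_triggers(num_keywords, rate_per_keyword))


if __name__ == "__main__":
    RATE = 0.5  # hypothetical false triggers per keyword per day (illustrative only)
    for k in (1, 10, 100, 1000):
        print(k, expected_false_triggers(k, RATE), round(prob_at_least_one(k, RATE), 3))
```

Under these assumptions, going from one pattern to a hundred takes you from an occasional misfire to dozens per day.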

Seriously, if we had low-power voice recording plus voice-to-text, you'd already have instant conversation subtitles on your phone, instant translation, and so on. We simply don't have that yet. Those features do exist, but they are power hungry (so if you use them, say goodbye to your battery life).