this post was submitted on 04 Aug 2024

memes

dank memes

Rules:

- All posts must be memes and follow a general meme setup.
- No unedited webcomics.
- Someone saying something funny or cringe on twitter/tumblr/reddit/etc. is not a meme. Post that stuff in /c/slop
- Va*sh posting is haram and will be removed.
- Follow the code of conduct.
- Tag OC at the end of your title and we'll probably pin it for a while if we see it.
- Recent reposts might be removed.
- No anti-natalism memes. See: Eco-fascism Primer

founded 4 years ago

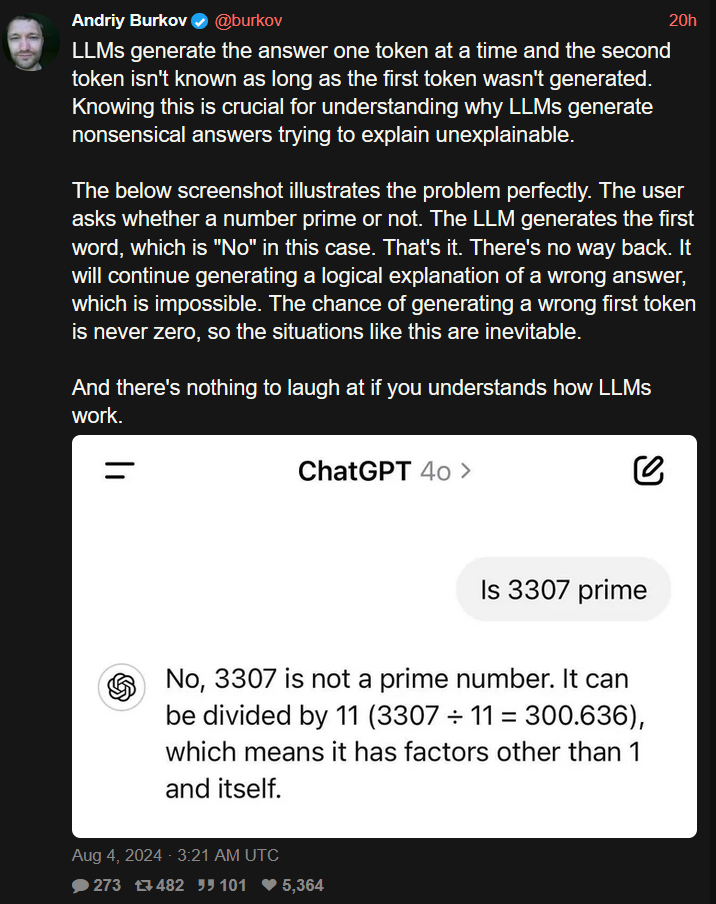

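There are chain-of-thought and tree-of-thought approaches, and maybe even more. From what I understand, they make the model work through intermediate reasoning steps (tree of thought even explores several candidate branches over multiple passes), and even with smaller models you can get better results this way.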
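Roughly, the tree-of-thought idea can be sketched as a beam search over partial reasoning chains. This is a toy sketch, not any paper's reference implementation: `propose` and `score` are hypothetical stand-ins for what would be LLM calls in a real pipeline, and the "puzzle" (reach 10 starting from 1 using +1, +3 and *2) is made up for illustration.

```python
from typing import Callable, List

Thought = List[str]  # a partial chain of reasoning steps


def tree_of_thought(
    root: Thought,
    propose: Callable[[Thought], List[str]],
    score: Callable[[Thought], float],
    depth: int,
    beam_width: int,
) -> Thought:
    """Breadth-first tree-of-thought search: expand every kept thought with
    proposed next steps, score all children, keep the best `beam_width`."""
    frontier = [root]
    for _ in range(depth):
        children = [t + [step] for t in frontier for step in propose(t)]
        if not children:
            break
        # Python's sort is stable, so ties keep the order they were generated in.
        children.sort(key=score, reverse=True)
        frontier = children[:beam_width]
    return max(frontier, key=score)


# --- Hypothetical toy stand-ins for the LLM calls ---
TARGET = 10


def apply_steps(thought: Thought) -> int:
    """Evaluate a chain of arithmetic steps, starting from 1."""
    value = 1
    for step in thought:
        if step == "+1":
            value += 1
        elif step == "+3":
            value += 3
        elif step == "*2":
            value *= 2
    return value


def propose(thought: Thought) -> List[str]:
    # A real setup would ask the LLM for candidate next steps given `thought`.
    return ["+1", "+3", "*2"]


def score(thought: Thought) -> float:
    # A real setup would ask the LLM to rate how promising `thought` looks.
    return -abs(TARGET - apply_steps(thought))


if __name__ == "__main__":
    best = tree_of_thought([], propose, score, depth=3, beam_width=2)
    print(best, apply_steps(best))  # ['+3', '+3', '+3'] 10
    greedy = tree_of_thought([], propose, score, depth=3, beam_width=1)
    print(greedy, apply_steps(greedy))  # ['+3', '*2', '+1'] 9
```

With `beam_width=1` this collapses to greedy step-by-step generation and gets stuck at 9, while keeping two branches alive finds the exact answer. That is where the extra passes (and extra model calls) come from.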

Still, it is funny how AI (LLMs) is heavily marketed as something that will make many jobs obsolete and/or take over humanity, yet to get any meaningful results people end up building whole pipelines around LLMs, sometimes even using several models for different tasks. I also read a little about retrieval-augmented generation (RAG), and apparently it has a lot of caveats: there are limits on what data can and cannot be retrieved successfully, the data has to be chunked to fit into the context window while still retaining all the valuable information, and there is no one-size-fits-all solution to this problem.
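To make the chunking trade-off a bit more concrete, here is a naive sketch of fixed-size chunking with overlap. It assumes words as a stand-in for tokens (real pipelines usually count tokens with the model's own tokenizer), and `chunk_words` is just a hypothetical helper name. The overlap repeats a little text across chunk boundaries so context cut at a boundary is not lost entirely, at the cost of storing some text twice.

```python
from typing import List


def chunk_words(text: str, chunk_size: int, overlap: int) -> List[str]:
    """Split text into chunks of at most `chunk_size` words, where each chunk
    starts with the last `overlap` words of the previous one."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap  # how far each new chunk advances
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a chunk reaches the end, to avoid a tail made only of overlap.
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    doc = "a b c d e f g h i j"
    print(chunk_words(doc, chunk_size=4, overlap=1))
    # ['a b c d', 'd e f g', 'g h i j']
```

Picking `chunk_size` and `overlap` (or splitting on sentences, headings, etc. instead of raw word counts) depends heavily on the data, which is exactly the "no one-size-fits-all" problem.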

Overall, it feels like someone made a black box (the LLM), someone else tried to use that black box to deal with existing complexity, failed, and started building another layer of complexity around it. So current AI adopters can end up with two complex entities on their hands. And I find that kind of funny.